“Democratizing” AI is like “democratizing” a tank. Sure, we can make it open-source … or even let civilians become tank commanders. But a tank is still a tank.1

– Evgeny Morozov, technology critic

Having AI servants will make everything easier for adults. Having AI servants will make everything easier for children, too, who will then not learn to do anything hard.2

– Jonathan Haidt, social psychologist

Abstract

The growth of new technology, in particular new communication technology, has raised questions about technology’s role in society. Critics argue that it has increased hate speech, polarized the electorate, reduced deliberation, and coarsened the discourse. Others have emphasized the democratizing potential of tools facilitating collective action and the potential for new exchange of ideas. To better understand citizens’ general orientation toward technology, we develop a new anti-technology scale and test it on two diverse samples of Americans. Our scale measures three distinct areas of anti-technology attitudes: 1) attitudes toward social media, 2) attitudes toward artificial intelligence, and 3) concerns about modernity. We show that these areas form a general, latent anti-technology orientation. We then show that this general anti-tech orientation predicts attitudes toward technology policies and support for contentious actions against tech companies. Finally, we use a pairwise comparison experiment to understand which pro- and anti-AI arguments are most persuasive.

Introduction

Concerns about new technology, similar to those expressed by Morozov and Haidt, are long-standing.3 Many observers believe that technology makes users cognitively lazy and increases mental health problems.4 Other experts have warned that the recent arrival of powerful large language models (LLMs) like ChatGPT, and future increased capabilities of artificial intelligence (AI), will have negative consequences for workers by taking jobs, and will increase misinformation.5 Pew Research polls further show that Americans are more “concerned” than “excited” about AI, and YouGov survey data examined by the AI Policy Institute shows that 60 percent of Americans feel “AI will undermine meaning in our lives by automating human work, making humans less useful and relevant, and weakening our social bonds.”6 A growing chorus of voices argues that the business models or products of digital tech are fundamentally at odds with liberal democratic values.7 In addition, media and business personalities with a large following, such as Elon Musk and Tucker Carlson, have spoken favorably about Ted Kaczynski, the Unabomber and author of an anti-technology manifesto.8

Although various risks of AI are currently speculative, arguments that social media use is dangerous for children and teenagers and that social media companies prioritize profit over the safety and well-being of their users have resonated with citizens for some time.9 Recent public opinion polls reflect this antipathy, showing a decline in trust toward social media companies, with Americans increasingly concerned about surveillance, misinformation, and the cyber risks posed by the increasing use of technology.10 A survey on confidence in institutions and support for democracy looked at different American companies and institutions between 2018 and 2021, and found that tech companies such as Facebook, Amazon, and Google experienced the steepest drop in trust.11 While scholars disagree about the impact of dubious or fake content on voters, most experts believe social media has exacerbated the spread of misinformation.12

The growing concern over the impact of technology on society raises the question: what determines attitudes toward technology? In this paper, we measure anti-technology sentiment among Americans, test its predictive power in explaining policy preferences and contentious political behavior, and discuss its political implications.

Previous research has focused on individual attitudes toward specific technologies such as social media, artificial intelligence, and robots, as well as on people’s fluency or comfort with technology.13 Researchers have also investigated trust in Western medicine and explored whether trust in folk medicine predicts anti-expert attitudes.14 Further research finds that anti-tech sentiment, particularly toward social media companies, is not solely motivated by partisanship: large majorities of both Democrats and Republicans feel that social media companies have too much power and influence, believe “Big Tech” is a problem for the US economy, and favor breaking up the biggest tech companies.15 However, existing research does not establish whether these various components of anti-technology attitudes are distinct or represent a shared anti-technology orientation.

Previous research has documented the widespread economic benefits of new technologies on standards of living and welfare.16 But technology does not just influence the economy. It also influences culture and society, a core issue for much of sociology and political science. Modernization and associated theories argue that shifts in technology can help countries become wealthier and more advanced, and more likely to become democracies.17 Thus, new technology provides a virtuous cycle of more wealth, more stability, and more democracy. Yet other sociological theories argue that advances in technology can lead to a breakdown of social ties, create disorder, and foster alienation.18 These competing effects of new technology are at the heart of the tension between modernization theories and sociological theories.

All of this suggests three things about anti-technology sentiment: 1) that it is an important and possibly growing phenomenon; 2) that it is core to many important political and sociological theories; but 3) that it has been undertheorized and frequently assessed using limited metrics.

Using a pair of surveys and an experiment, we take an empirical approach to measure the contours of anti-technology sentiment among Americans. First, we measure three distinct anti-technology components: 1) negative attitudes toward social media, 2) fears over AI, and 3) a negative view of modernity. We show that these anti-technology components form an anti-tech disposition, which is relatively common among Americans. For instance, a majority of our survey respondents agree that “technology has taken over our lives” or that social media harms young users and fuels envy and social comparisons. Over half of respondents also fear that AI could hurt humans. Psychological variables including loneliness and conspiracism are associated with stronger anti-tech sentiment. Partisanship plays a modest role as well (skepticism of technology and modernity is marginally more common among Republicans than Democrats). Unlike partisanship, the anti-tech orientation predicts support for breaking up big technology companies. We also find a positive association between anti-tech attitudes and endorsing or excusing (hypothetical) extremist anti-tech behavior. Finally, using a pairwise survey experiment, we show that positive and negative sentiment toward AI is malleable.

In doing so, our paper represents a new and important contribution. Surveys that have previously focused on technology usually focus on a few key flavors of anti-technology (e.g., social media or AI) or negative implications of new technology (e.g., job losses).19 We take a comprehensive approach to anti-technology attitudes and explore their structure and implications.

Having established the context and importance of anti-technology sentiment, we now turn to our theoretical framework and expectations. Drawing from existing literature on social stress, political alienation, and technological change, we propose several hypotheses about the nature and consequences of anti-tech attitudes.

Theoretical Expectations

What explains core attitudes toward technology? Central to this question are the social effects of new innovations. Technological advances can create social stress and upheaval.20 Others argue that new technology is a form of social control.21 New technologies create new markets and upend economies, reshaping society. For instance, the data-driven economies of the web have individual companies competing for individual eyeballs and attention, all while consumers provide their own data in exchange for access to the new media applications.22 As Acemoglu and Johnson argue, new technologies generate new wealth and advances, but it is not uncommon for elites to capture these benefits, leading to social and economic marginalization for ordinary citizens.23 So, while new technologies lead to more wealth and increase standards of living, they also create new winners and losers and, subsequently, social tensions.

New communication technologies have always attracted critics. For instance, in the 1970s, the prominent environmental and anti-technology activist Jerry Mander called for abandoning television, believing it irredeemable.24 He famously said, “To speak of television as ‘neutral’ and therefore subject to change is as absurd as speaking of the reform of a technology such as guns.”25 Similar critiques have been leveled against social media, with some arguing that its underpinnings of social validation, virality, and polarizing content are not only addictive but also harmful, both to human interactions generally and to young adolescents and teenagers especially.26 Others suggest that social media, while potentially helping activists and the voiceless organize, can also polarize the populace, lead to echo chambers, facilitate the worst political impulses with hate speech, and empower authoritarian governments.27

The arrival of new technology can also increase political alienation. This occurs when individuals feel isolated, lonely, angry at the status quo, and estranged from the current political system.28 Conspiracism and anti-establishment politics are important side effects of alienation, currently forming a cleavage in American politics that is separate from left-right ideology and orientation.29 Several studies show how feelings of alienation, conspiracism, and anti-establishment politics are related to support for political violence.30 Some researchers find that the economic dislocation associated with automation can also influence voting behavior, while others find limited effects of automation threats on political preferences.31 Perhaps most concerning is that the automation effects of AI could greatly increase inequality and thus corrode democracy.32

In sum, as new technology increases social stress and upheaval, certain people may be skeptical of the benefits of this new technology. And, we argue that there is a connection between new technology, political alienation, and anti-system politics. These observations form the basis for our preregistered hypotheses.

We focus on four questions in particular. First, is there a general anti-technological predisposition? In other words, do Americans blame technology for specific social problems, including nasty politics, loneliness, erosion of privacy, or the circulation of hate and misinformation? Second, what are the correlates of this anti-tech orientation? Are lonely individuals and those who harbor resentments against “the system” more critical of technology in general? Third, are people who score higher on this anti-tech orientation more likely to support regulation on technology companies and to approve of citizen-led violence against representatives of such companies? Lastly, which arguments in favor of AI adoption (if any) are viewed by citizens as persuasive?

Our expectations are as follows:

→ A general anti-tech orientation exists and will emerge from a factor analysis of questions related to concerns about social media, fear of AI, negative sentiment about modernity, and unfavorable or skeptical attitudes toward Western medicine.

→ Higher scores on the anti-tech orientation will be associated with loneliness and conspiracism.

→ Anti-tech orientation attitudes will predict support of regulatory policies (independently of partisanship).

→ Respondents who score higher on the anti-tech orientation will be more forgiving of a hypothetical violent act or perpetrator. In addition, approval of violence against leadership of a tech company will be higher among respondents who exhibit higher need for chaos relative to those with lower anti-systemic views.

Data

In July 2023, we conducted our first survey, recruiting respondents via the Lucid/Cint platform and collecting responses from a nationally diverse sample of seven hundred US adults (dataset 1, fielded July 24–25, 2023). We followed up the first study with a nationally representative poll in collaboration with YouGov (dataset 2; N=1,350, fielded between September 26 and October 2, 2023). In the first survey, we fielded a larger number of anti-tech constructs and their correlates to explore the contours of the anti-tech scale. The second survey is slightly more parsimonious (and contains nineteen questions tapping into respondents’ technology-related attitudes). Our research questions and selected expectations were preregistered before fielding the first survey.33

Our respondents were presented with a series of five-point (strongly disagree to strongly agree) questions that aimed to gauge their attitudes toward technology. These questions were grouped into four main clusters in the pilot study: 1) evaluations of social media platforms’ effects on society, health, and politics; 2) fear or optimism about AI; 3) views on modernity; and 4) questions capturing perceptions about the efficacy and trustworthiness of Western medicine versus alternative medical practices. The pilot study confirmed that the “Western versus Traditional Medicine” set of questions represented a separate factor from the remaining questions, and questions about Western medicine were therefore not asked on the YouGov survey, given our main focus on anti-technology attitudes.

The list below summarizes the questions we posed to our respondents:

- Social Media (eight questions): This category aimed to capture respondents’ perceptions of the impact of social media on society and on themselves. For instance, respondents were asked to consider statements such as “Social media has mostly been a bad thing for society because it spreads hate and misinformation” and “The reason our politics is so nasty is because of social media.”

- Artificial Intelligence (six questions in the YouGov dataset, four questions in the Lucid dataset): This segment probed respondents’ perspectives on AI and its implications. Sample questions from this category include the following: “I am worried that scientists are designing computer programs that could hurt humans;” “Computers and machines can help us do tasks that are too boring for humans to do;” “Artificial intelligence should not be allowed in schools;” and “The extinction of the human race due to AI is a real possibility.”

- Modernity (five questions): Here, we sought to understand the sentiments toward the broader theme of modern technological advancements and their implications on human intelligence, behaviors, and lifestyles. Examples from this section include the following: “Our reliance on machines and technology has made us less smart;” “All this modern technology prevents us from living in harmony with nature;” and “We have let modern technology like smartphones take over our lives.”

- Western versus Traditional Medicine (four questions; Study 1 only): Respondents were presented with statements such as “I trust alternative medical practices like homeopathy, acupuncture, Reiki, and herbal supplements more than modern Western medicine” or “Big pharmaceutical companies are secretly hiding cures for diseases like cancer.”

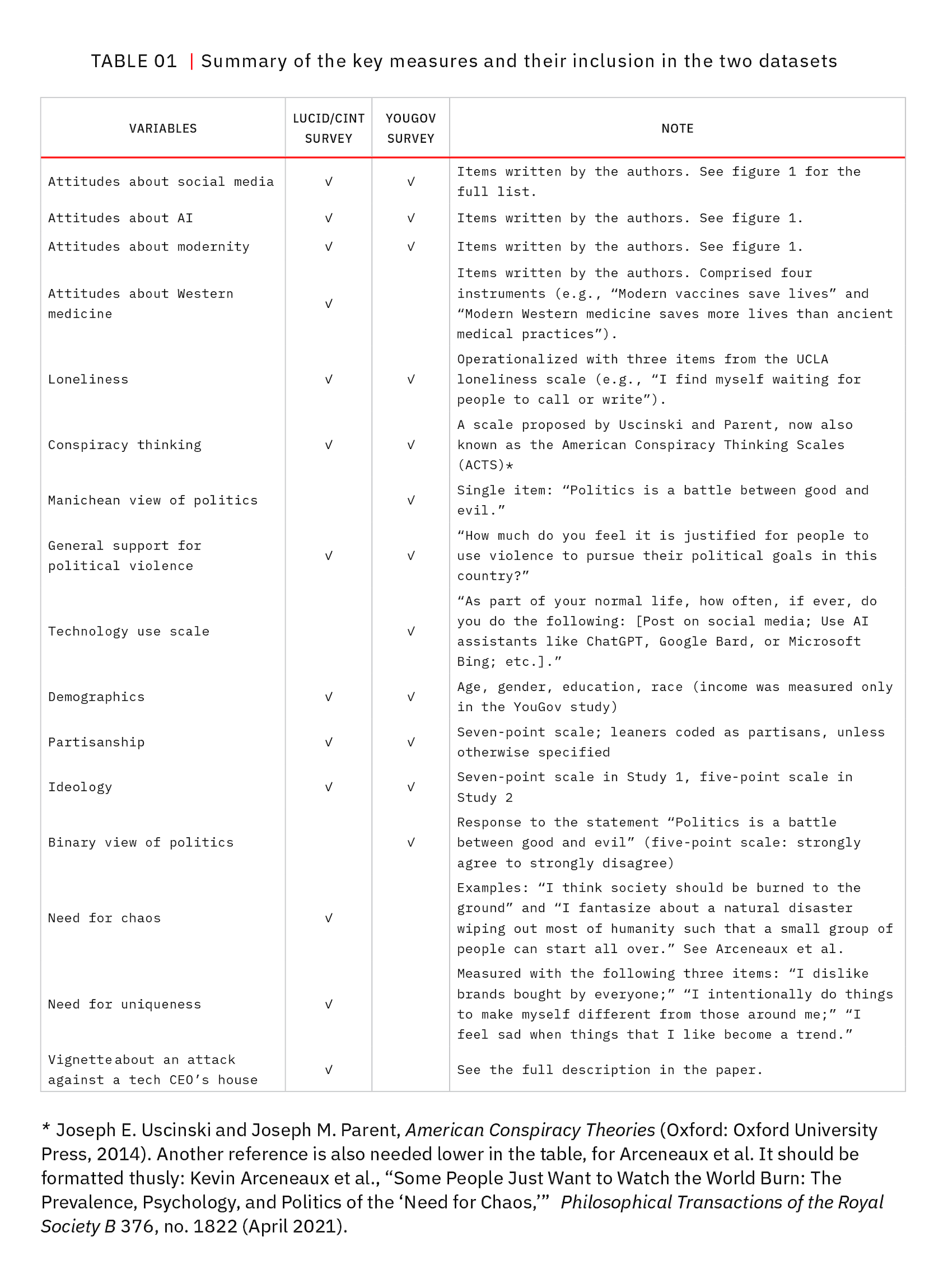

The full set of nineteen close-ended items we asked in the YouGov survey and the distribution of responses is displayed in figure 1. The wording of all questions is provided in the supporting information appendix (SI), and the distributions of responses from dataset 1 are shown in figure S.3.

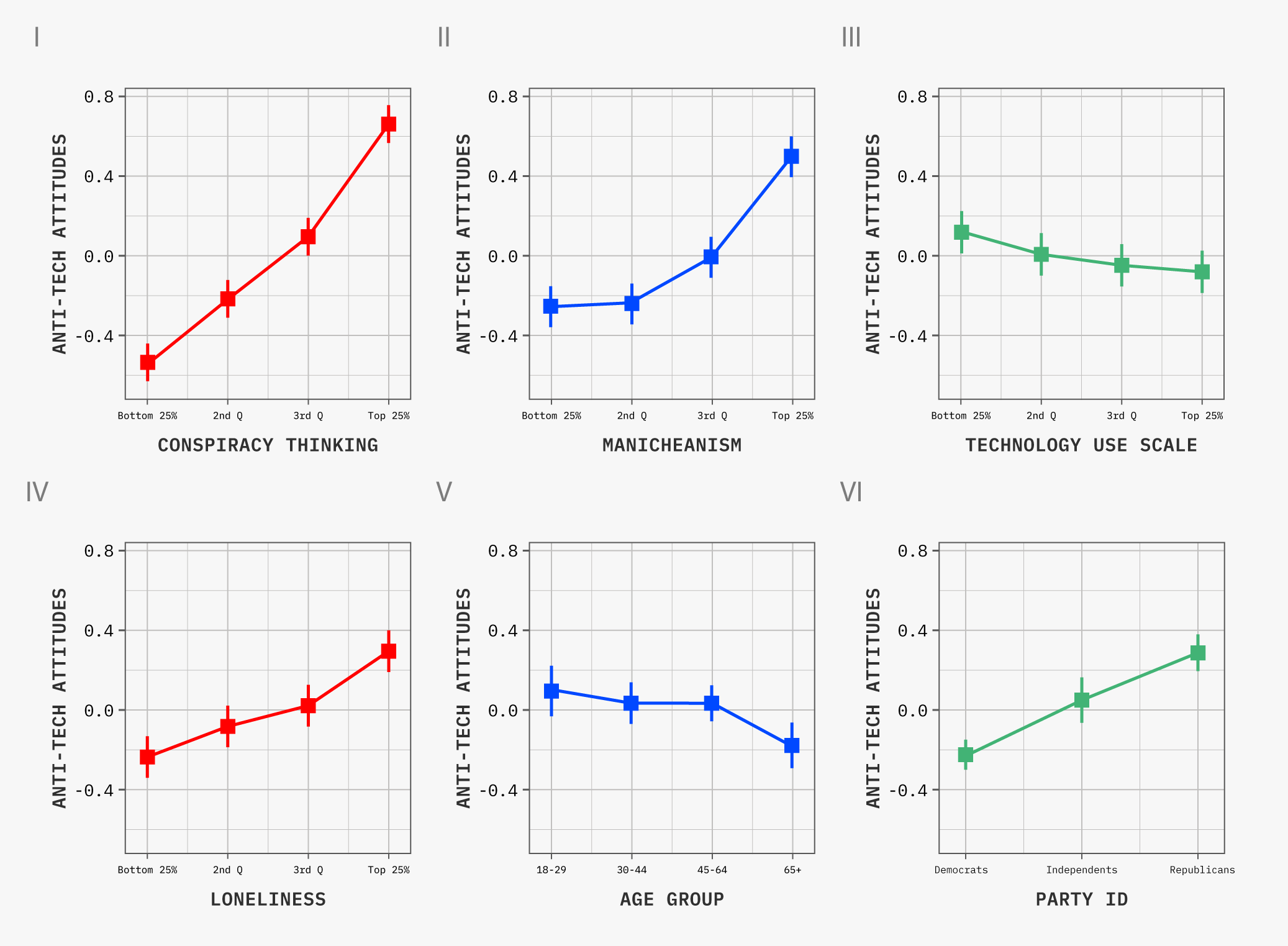

The components of the theorized anti-tech attitudes are also listed in table 1, with checkmarks indicating whether a given set of variables was included in a specific dataset. The table also contains the main independent variables that were measured and indicators of their inclusion across surveys.34

Outcome Variables

In addition to quantifying the prevalence and structure of anti-technology attitudes, our second objective is to explain policy preferences and views on politically controversial innovations such as facial recognition (when used by the police) or algorithms deployed by social media platforms to identify false information. We measured preferences by posing direct prompts to our respondents:

- On regulating AI: “How much do you agree or disagree with the following statement? Research on artificial intelligence should be heavily regulated by the government.” (Scale of 1–5: strongly agree to strongly disagree)

- On breaking up Big Tech: “Some people think that big tech companies like Google, Amazon, Apple, Meta/Facebook, and Microsoft are too powerful and should be broken up, while others say that big tech companies should not be broken up because it will hurt innovation and harm customers. Which comes closer to your views on big tech companies?” (Binary response scale: “Break up Big Tech” or “Do not break up Big Tech”)

- The following is a list of new potential technologies. Would the widespread use of these technologies be a good or bad thing for society? (Binary response: “A good idea for society” or “A bad idea for society”)

→ Facial recognition by the police to spot criminals in crowds

→ Automated computer programs by social media companies to find false information

→ Robots that replace cashiers, cleaners, and cooks

→ Cars that are completely operated by computers and don’t need humans to drive

→ A computer chip implanted in the brain that allows people to process information faster and more accurately

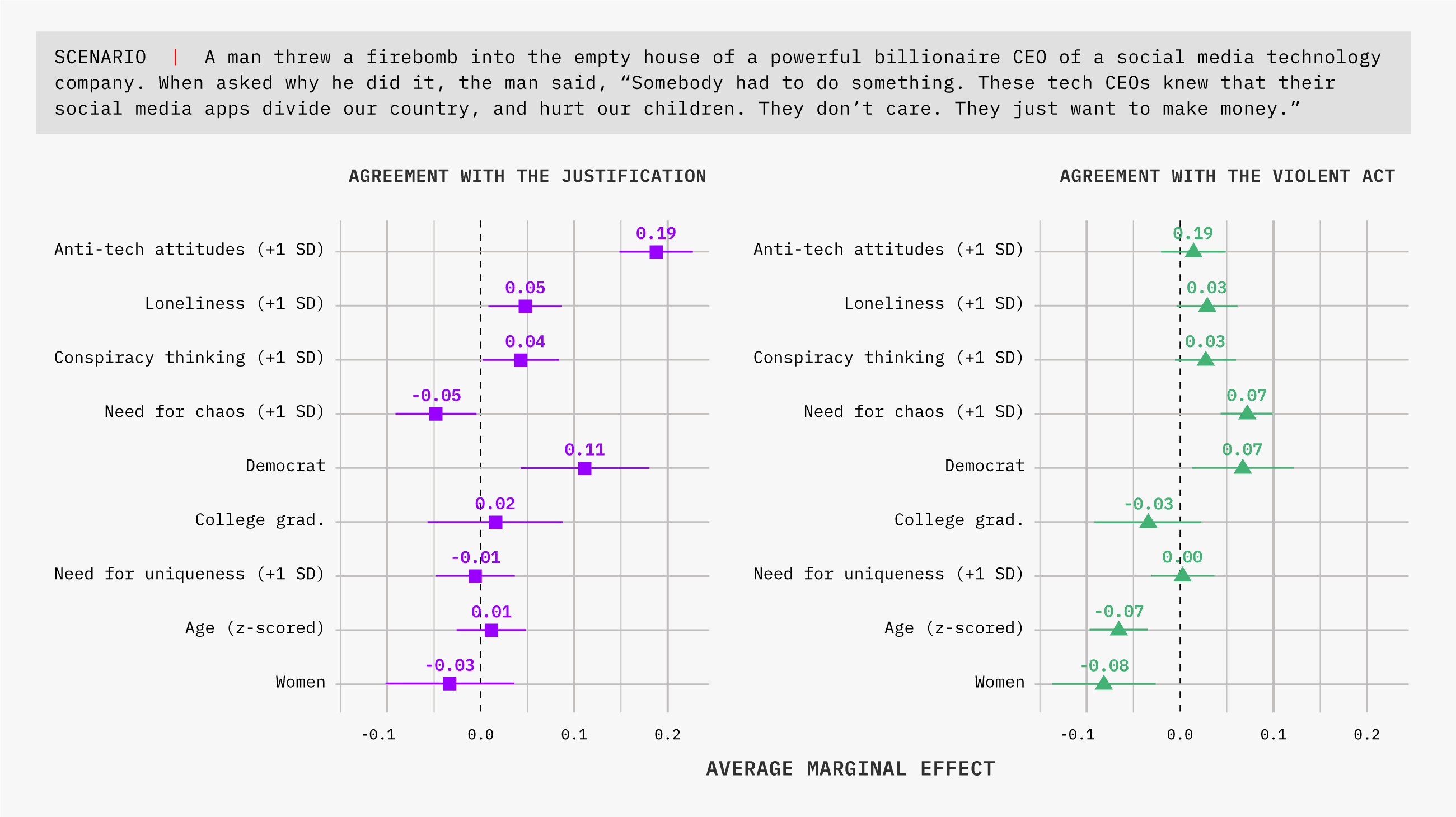

Next, we wanted to understand if, and to what extent, certain technological apprehensions may translate into support, sympathy, or excusing of extremist anti-tech views and actions. To that end, the following outcome variables (measured in dataset 1 only) aimed to measure evaluations of the views and actions of an anti-tech terrorist, who was described with the following vignette:

A man threw a firebomb into the empty house of a powerful billionaire CEO of a social media technology company. When asked why he did it, the man said, “Somebody had to do something. These tech CEOs knew that their social media apps divide our country and hurt our children. They don’t care. They just want to make money.”

After reading about the hypothetical scenario, respondents were asked to answer the following set of questions:

- On support for violence: “How much do you support or oppose the man’s actions?” (Scale of 1–4: strongly oppose to strongly support, with no neutral option provided)

- On agreement with the letter: “Regardless of whether you agree or disagree with the man’s actions, how much do you agree or disagree with what the man said?” (Scale of 1–5: strongly agree to strongly disagree, with a neutral option provided in the middle)

- On punishment severity: “How much time in prison, if any, do you think the man should face?” (Eleven options provided, ranging from “No time in prison” to “More than twenty years in prison”)

The modal response to the punishment severity question was “2–5 years in prison,” and approximately 20 percent of respondents indicated that the perpetrator should serve at most thirty days in prison (this proportion includes the 9 percent of respondents who told us that the man should face no time in prison).

Results

We now present the findings from our surveys. Our analysis proceeds in four parts. First, we examine the structure and prevalence of anti-tech attitudes among Americans. Second, we investigate the correlates and predictors of these attitudes. We then explore how anti-tech sentiments relate to policy preferences and support for contentious political actions. Finally, we report results from our persuasiveness experiment.

Study 1: Anti-tech Attitudes

Structure of Anti-tech Attitudes

Our analysis begins by presenting the distribution of responses to the nineteen tech-related questions that we asked in the nationally representative YouGov survey, as depicted in figure 1. What immediately stands out from the data is that tech-critical sentiment among respondents is common: significant majorities believe that social media has detrimental effects on children and teenagers, and there is a prevailing notion that modern technology on the whole has excessive influence over our daily lives. The perception that AI advancements will lead to job losses is also widespread. At the same time, most respondents also concurred that modern technology made their lives more convenient, and that social platforms facilitate activism or staying connected to one’s friends and family.

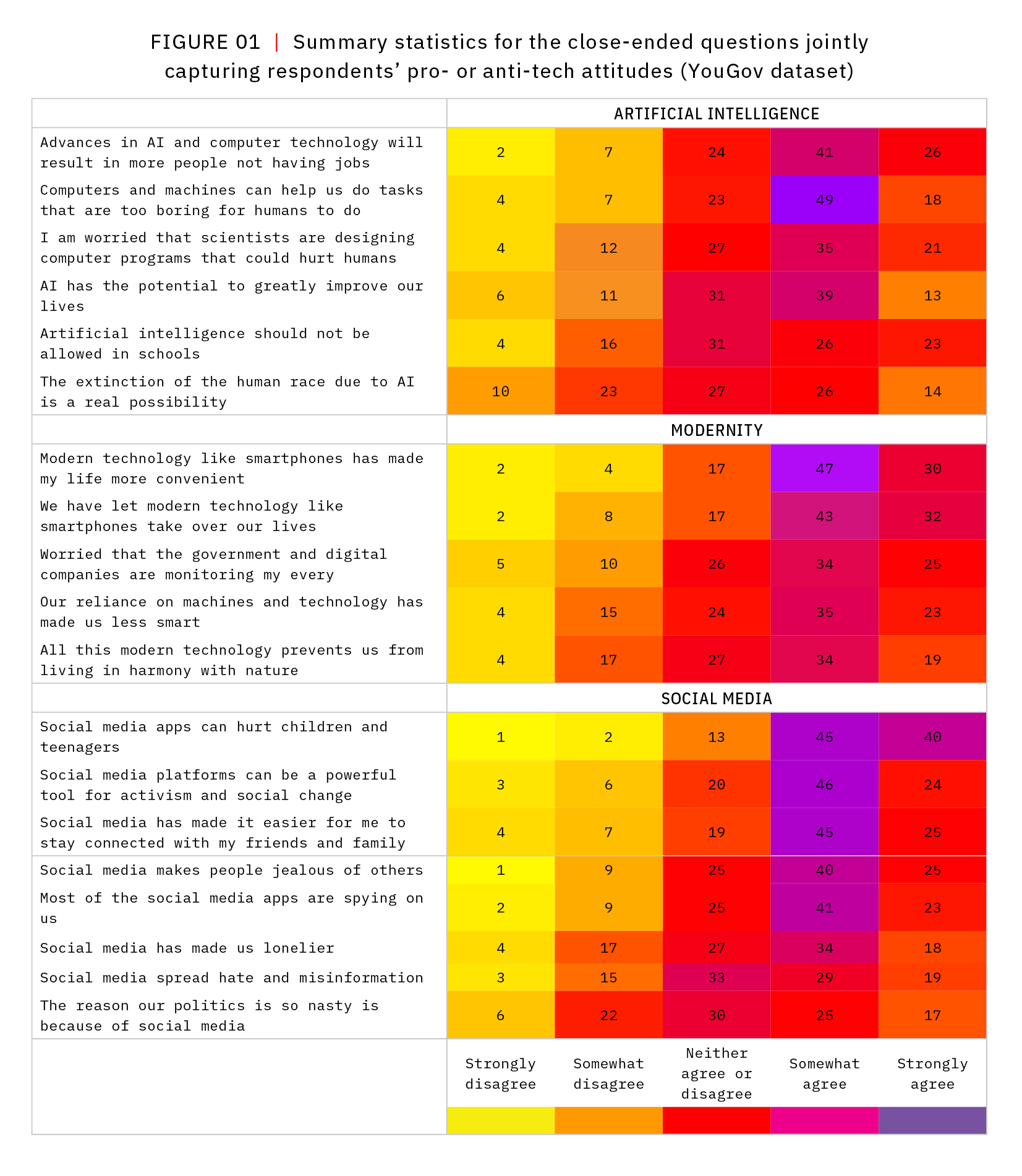

Exploratory factor analysis was used to discern if the meaningful clusters within our dataset were grouped by topics or some other attribute. The only substantive topic that emerged as a separate factor was the set of views of modern versus traditional medicine, measured in the initial survey. In the Lucid dataset, we identified three distinct groupings of variables: first, the dominant factor which emerged—and one we will rely on heavily throughout our analysis—encapsulates general anti-technology orientation. This factor gives us insights into the overarching sentiments of respondents toward AI, social media, and modernity in general. The second factor taps into skepticism of the tangible benefits derived from technology (e.g., respondents disagreeing that social media is a powerful tool for activism, or disagreeing that modern technology made their own lives more convenient).

Meanwhile, the third factor emerged as a preference for traditional folk medicine blended with a skepticism of Western medicine practices (see figure S.4 for factor loadings among the Lucid respondents). Given these initial findings among our Lucid respondents, we posed the same set of questions about social media, AI, and modernity (plus two new items about AI) to YouGov panelists two months later to assess whether the emergence of a first dominant anti-tech factor replicates. Figure 2 shows the results. We see that in general two factors emerge: a general anti-tech orientation (factor 1) and techno-optimism (factor 2). This general anti-tech orientation (factor 1) will be our main factor of focus because it explains 46.6 percent of the variance, its eigenvalue is 4.99, and the Cronbach’s alpha statistic using all nineteen items jointly is 0.85, indicating excellent reliability.
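As an illustration of the reliability statistic reported above, the following is a minimal sketch of how Cronbach's alpha can be computed from item-level responses. It is not the authors' code: the toy responses and the function name `cronbach_alpha` are hypothetical, and the sketch assumes all items are already coded in the same direction (pro-technology items would need to be reverse-coded first).

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents, each a list of k item scores.

    Assumption: every item is coded in the same direction; reverse-coding
    of oppositely worded items is not shown here.
    """
    k = len(rows[0])
    # Sample variance of each item across respondents.
    item_vars = [variance([r[j] for r in rows]) for j in range(k)]
    # Sample variance of each respondent's summed score.
    total_var = variance([sum(r) for r in rows])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical toy data: 5 respondents, 3 items on a 1-5 agreement scale.
toy = [
    [1, 2, 1],
    [2, 2, 3],
    [3, 3, 3],
    [4, 5, 4],
    [5, 4, 5],
]
alpha = cronbach_alpha(toy)
print(round(alpha, 3))
```

Alpha rises when items covary strongly relative to their individual variances, which is why a single dominant factor and a high alpha tend to appear together.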

Correlates of Anti-tech Attitudes

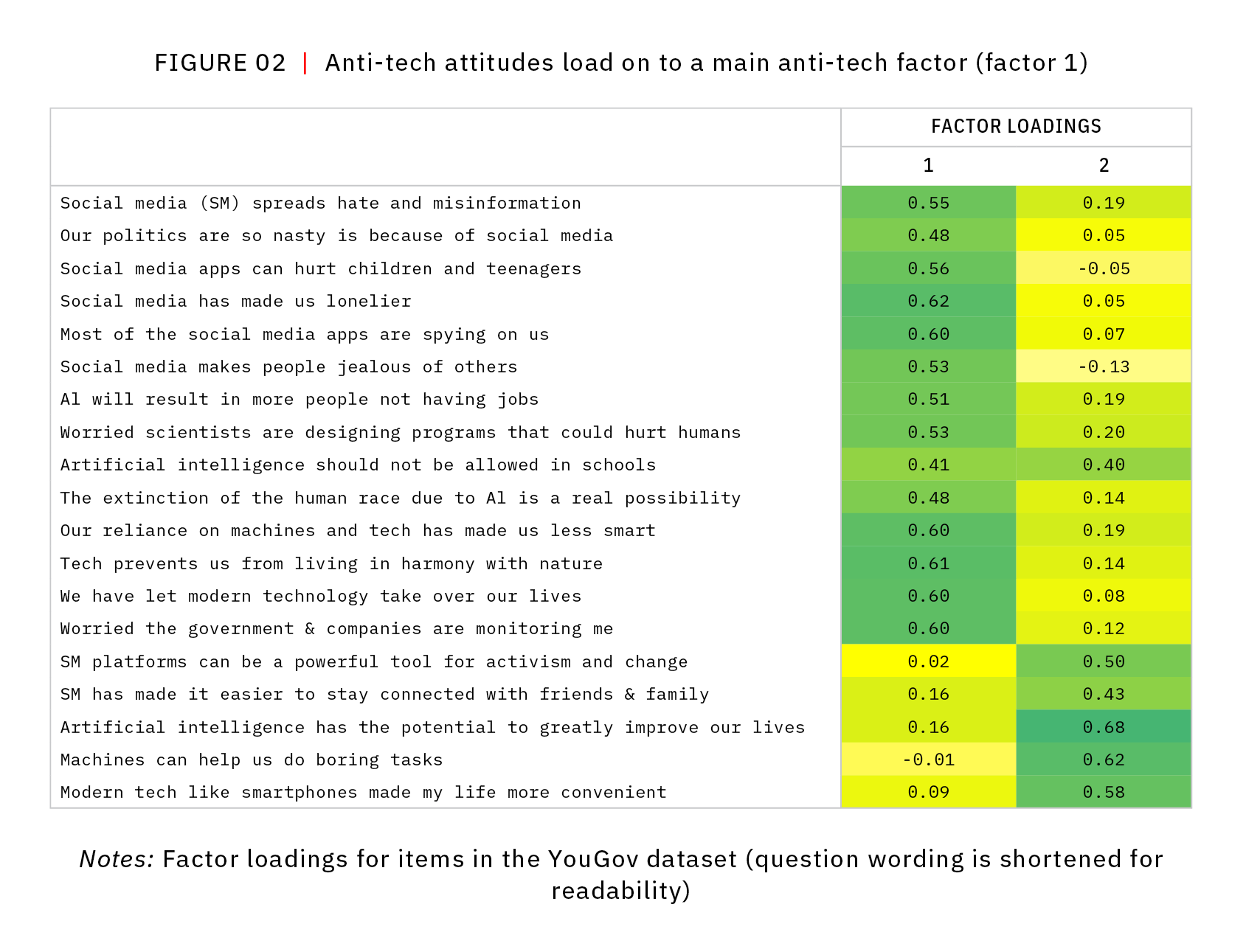

Before turning to models of policy preferences or acceptance of innovations such as self-driving cars or facial recognition (where anti-tech attitudes will serve as the main independent variable), we first treat anti-tech attitudes as a dependent variable to better understand who is more likely to hold these attitudes. We start by plotting the distributions of these attitudes separately for different levels of the main covariates of interest.

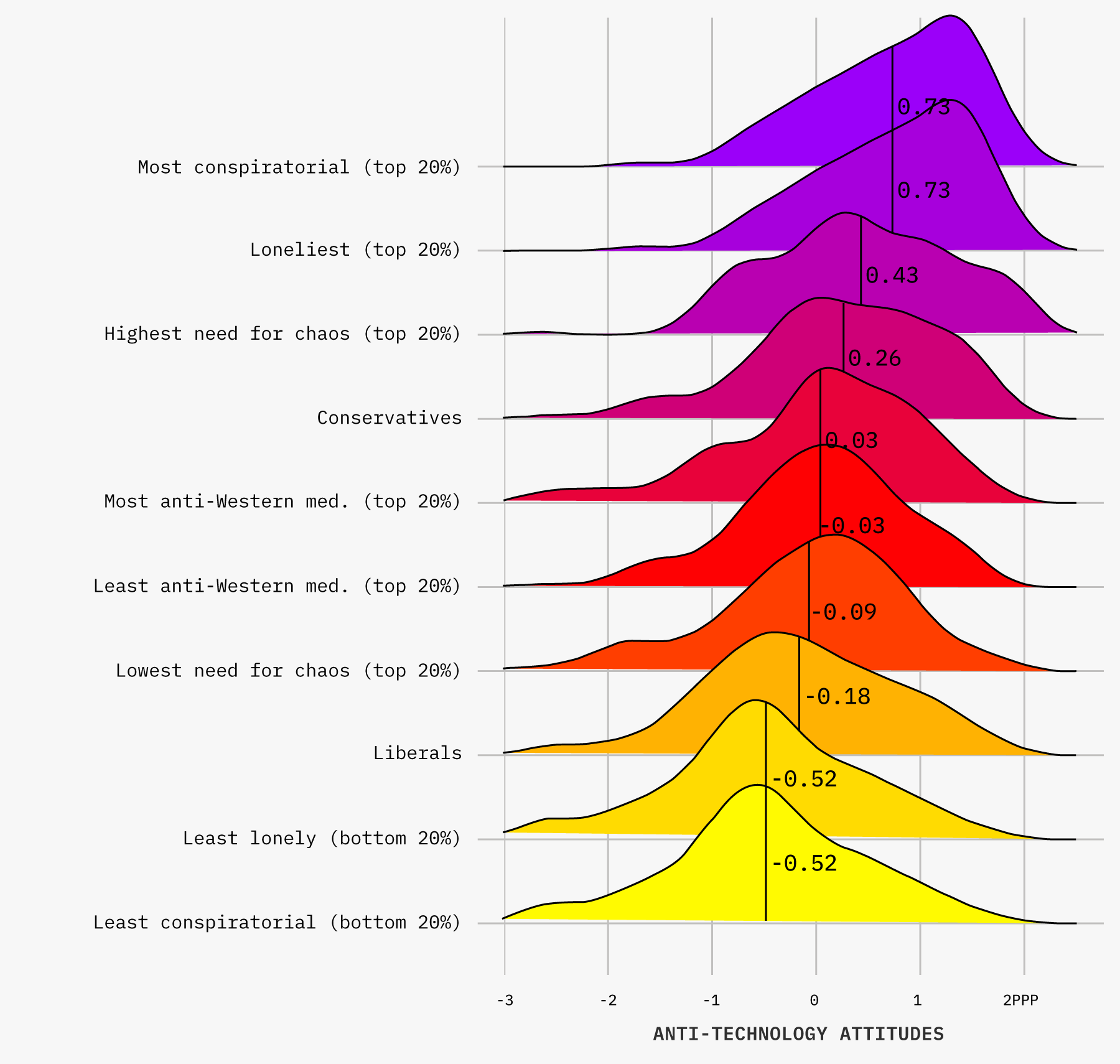

Beginning with conspiracism in the top-left panel of figure 3, we find that (z-scored) anti-tech attitudes average 0.64 among the most conspiracy-inclined quarter of the respondents, and -0.54 among those in the bottom quartile (i.e., the relatively pro-establishment respondents). As the slope in that panel indicates, the bivariate association between anti-tech attitudes and conspiracism is stronger than for the other potential predictors. The distance in anti-tech attitudes between the least lonely quarter of respondents and the most lonely quarter is 0.54 standard deviations (bottom-left panel of figure 3), which puts the 1.2 SD gap for conspiracism into perspective. We also see that people who use ChatGPT, smartwatches, social media, and smart home assistants are slightly more pro-technology compared to people who use these products less or do not use them at all (top-right panel in the same figure).
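The quartile comparison can be sketched as follows. This is an illustrative sketch under stated assumptions, not the authors' procedure: the helper names `zscore` and `quartile_gap` and the toy data are hypothetical, and quartiles are formed by simple sorting without survey weights. The last lines only reproduce the arithmetic behind the reported gap.

```python
from statistics import mean, pstdev


def zscore(values):
    """Standardize values to mean 0 and standard deviation 1."""
    m, s = mean(values), pstdev(values)
    return [(v - m) / s for v in values]


def quartile_gap(predictor, outcome):
    """Mean outcome in the top quarter of `predictor` minus the bottom quarter."""
    pairs = sorted(zip(predictor, outcome))
    q = len(pairs) // 4
    bottom = mean([o for _, o in pairs[:q]])
    top = mean([o for _, o in pairs[-q:]])
    return top - bottom


# Hypothetical toy data: the outcome rises one-for-one with the predictor.
conspiracism = [0, 1, 2, 3, 4, 5, 6, 7]
anti_tech = zscore([0, 1, 2, 3, 4, 5, 6, 7])
toy_gap = quartile_gap(conspiracism, anti_tech)

# Arithmetic behind the gap reported in the text: 0.64 - (-0.54).
reported_gap = 0.64 - (-0.54)
print(round(reported_gap, 2))
```

Because the outcome is z-scored, the gap is read directly in standard deviation units, which is what makes the conspiracism and loneliness gaps comparable.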

Perhaps surprisingly, the relatively older respondents express lower anti-tech attitudes: on average, Americans who are sixty-five years old or older score 0.16 below the mean level of anti-tech attitudes. We also see that Republicans are relatively more oriented against modern technology than Democrats (the slope is comparable to the slope we saw for the loneliness scale).

Another way to display unadjusted differences across groups of respondents is provided in figure 4. Here we also add participants from the Lucid survey to allow for a pooled comparison across variables of interest. Among respondents with elevated levels of conspiracism, loneliness, and need for chaos, the average level of anti-tech attitudes is high, and the concentration of respondents with strong opposition to technology is especially visible (top three rows of figure 4). Among people who identify as liberal, who do not feel lonely, or who are skeptical of general conspiratorial narratives, the average level of anti-technology attitudes is low (with the median ranging from -0.52 to -0.18), and the distribution around the median point is more symmetric.

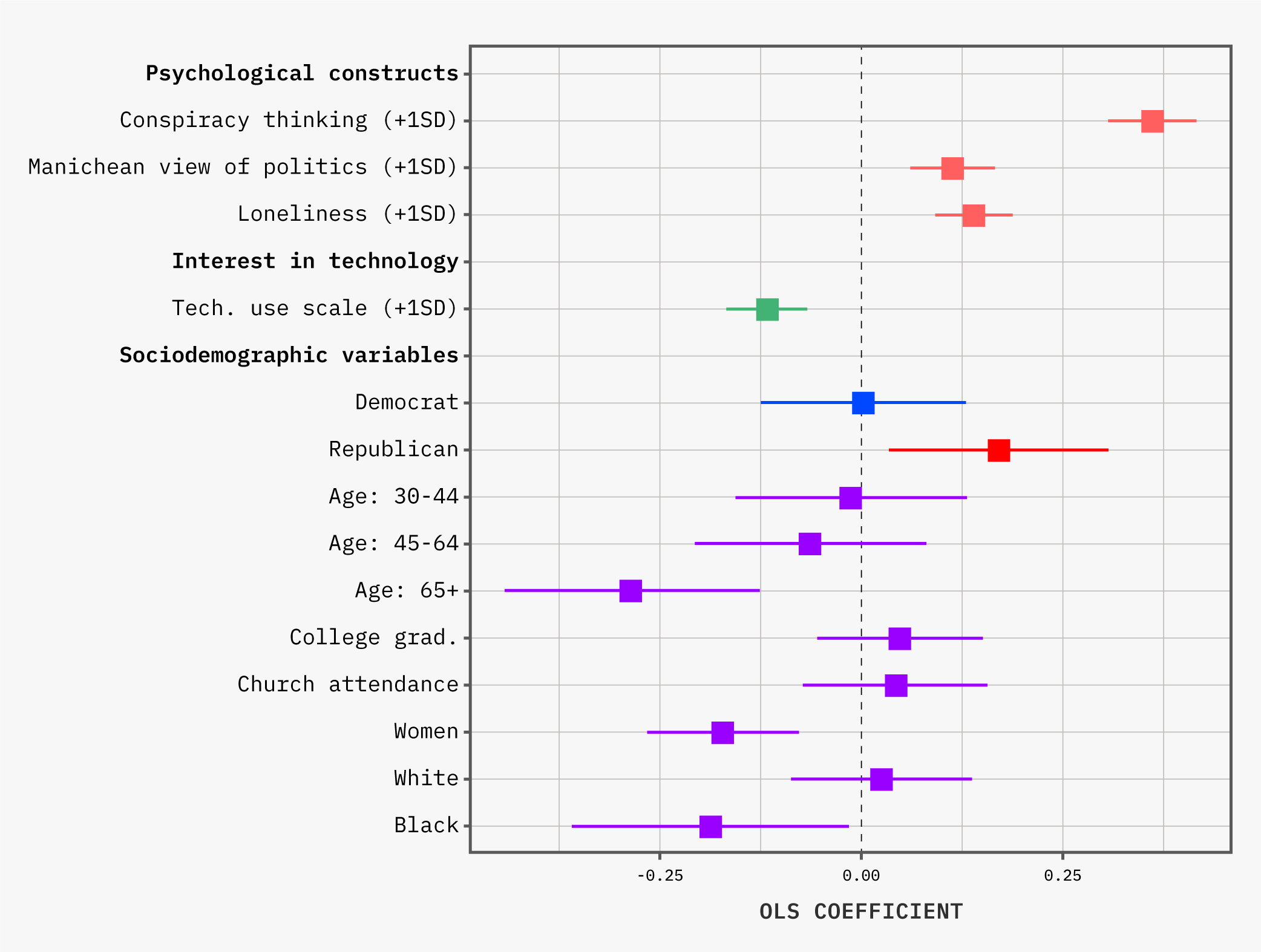

But are the (unadjusted) differences identified in the preceding two figures large and statistically significant after accounting for potential confounders? To get a more accurate sense of the predictive power of various potential drivers of anti-tech attitudes, we estimate a regression with the YouGov data in figure 5 (and also other psychological predictors in figure S.5, using the Lucid data). We see that anti-establishment views and loneliness (plus a need for chaos and a need for uniqueness in the Lucid survey) are positively associated with anti-tech attitudes.

Conditioning on partisanship, age, education, race, gender, and church attendance (as well as loneliness and frequency of using modern technology), we see that a one standard deviation increase in conspiracism is associated with a 0.36 SD increase in anti-tech attitudes. The association between loneliness and anti-tech attitudes is smaller but still positive (a 0.14 SD increase in anti-tech attitudes is predicted for each 1 SD increase in loneliness). We also find that the partisan gap shrinks after adjusting for covariates (relative to the raw differences reported in figure 3), that older respondents are less skeptical of technology (even controlling for relevant covariates), and that more frequent use of modern tools and innovations is associated with less negative attitudes toward technology.
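To make the "SD increase per SD increase" reading concrete, here is a minimal sketch of standardized regression coefficients for the simplest adjusted case, with two predictors. It is not the specification estimated in the paper, which conditions on many more covariates. The function names and toy data are hypothetical, and the closed-form expression used is the textbook formula for two standardized predictors.

```python
from statistics import mean, pstdev


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = mean([(a - mx) * (b - my) for a, b in zip(x, y)])
    return cov / (pstdev(x) * pstdev(y))


def standardized_betas(y, x1, x2):
    """Standardized OLS slopes of y on x1 and x2 (two-predictor closed form).

    Each slope is the expected change in y, in SD units, for a 1 SD
    increase in that predictor while holding the other predictor fixed.
    """
    r_y1, r_y2, r_12 = pearson(y, x1), pearson(y, x2), pearson(x1, x2)
    denom = 1 - r_12 ** 2
    beta1 = (r_y1 - r_y2 * r_12) / denom
    beta2 = (r_y2 - r_y1 * r_12) / denom
    return beta1, beta2


# Hypothetical toy data: y depends twice as strongly on x1 as on x2.
x1 = [-1, 1, -1, 1]   # stand-in for conspiracism
x2 = [-1, -1, 1, 1]   # stand-in for loneliness
y = [2 * a + b for a, b in zip(x1, x2)]
b1, b2 = standardized_betas(y, x1, x2)
```

With more than two predictors the same quantities come from a standard OLS routine applied to z-scored variables; the two-predictor form is used here only because it fits in a few lines.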

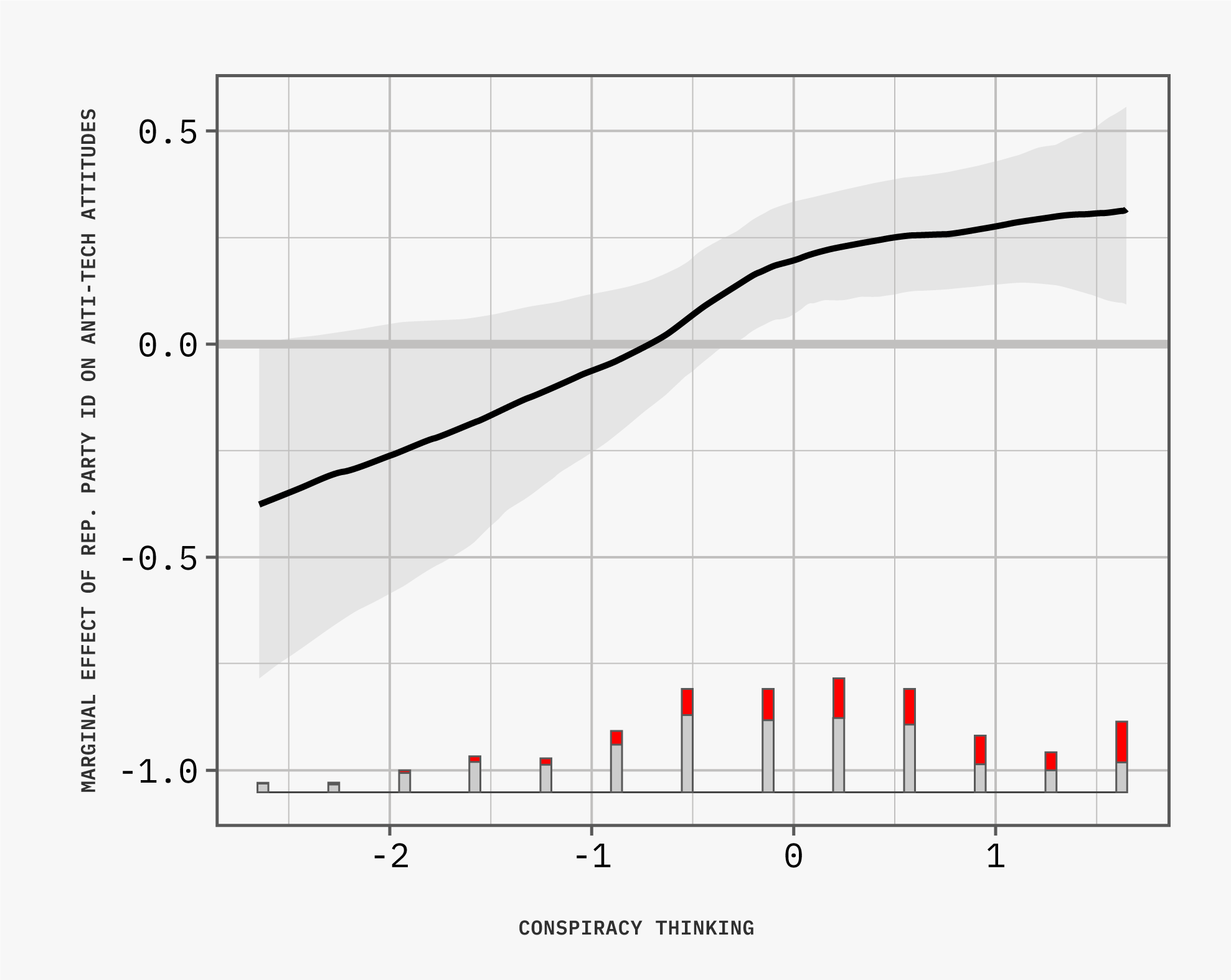

Next, we examine whether the size and direction of the positive coefficient on “Republican Party ID” (which some may view as a proxy for conservatism) change across the full range of values for conspiracism. As we saw, conspiracism is the best predictor of anti-tech attitudes, but does it matter differentially for Democratic versus Republican identifiers? To test this possibility, we interact partisanship with the level of conspiracy mindset, and otherwise maintain the same specification as the one displayed in figure 5. The inclusion of an interaction term allows us to assess whether the positive association between Republican Party ID and anti-tech attitudes is stable across levels of conspiracism, and we use the kernel regression approach proposed by Hainmueller, Mummolo, and Xu, which allows us to relax the assumption that the interaction between our two variables is linear.35 The results from this procedure are displayed in figure 6: we see that for the lower levels of conspiracy thinking, Republicans are no different in their anti-tech dispositions than independents. However, if a respondent exhibits average or above-average levels of conspiracism, then Republican Party ID is prognostic of elevated anti-tech views.

Study 2: Policy Preferences, Openness to Innovations, and Extremist Behavior

Moving forward in our analysis, we focus on the relationship between anti-tech attitudes and policy preferences. We estimate two distinct models, setting the dependent variable to 1 if a respondent expresses support for breaking up Big Tech (model 1), or when a respondent agrees with the statement that the government should heavily regulate AI (model 2). Through these models, our aim is to uncover if, and to what extent, anti-tech attitudes influence public policy stances, especially when accounting for other potentially influential factors like political affiliation. We control for partisanship and other suspected confounding variables, ensuring (albeit imperfectly) that the observed associations between anti-tech attitudes and policy preferences are not merely artifacts of underlying political beliefs or unobserved factors like demographics, exposure to technology through frequent use, or certain psychological attributes and predispositions.

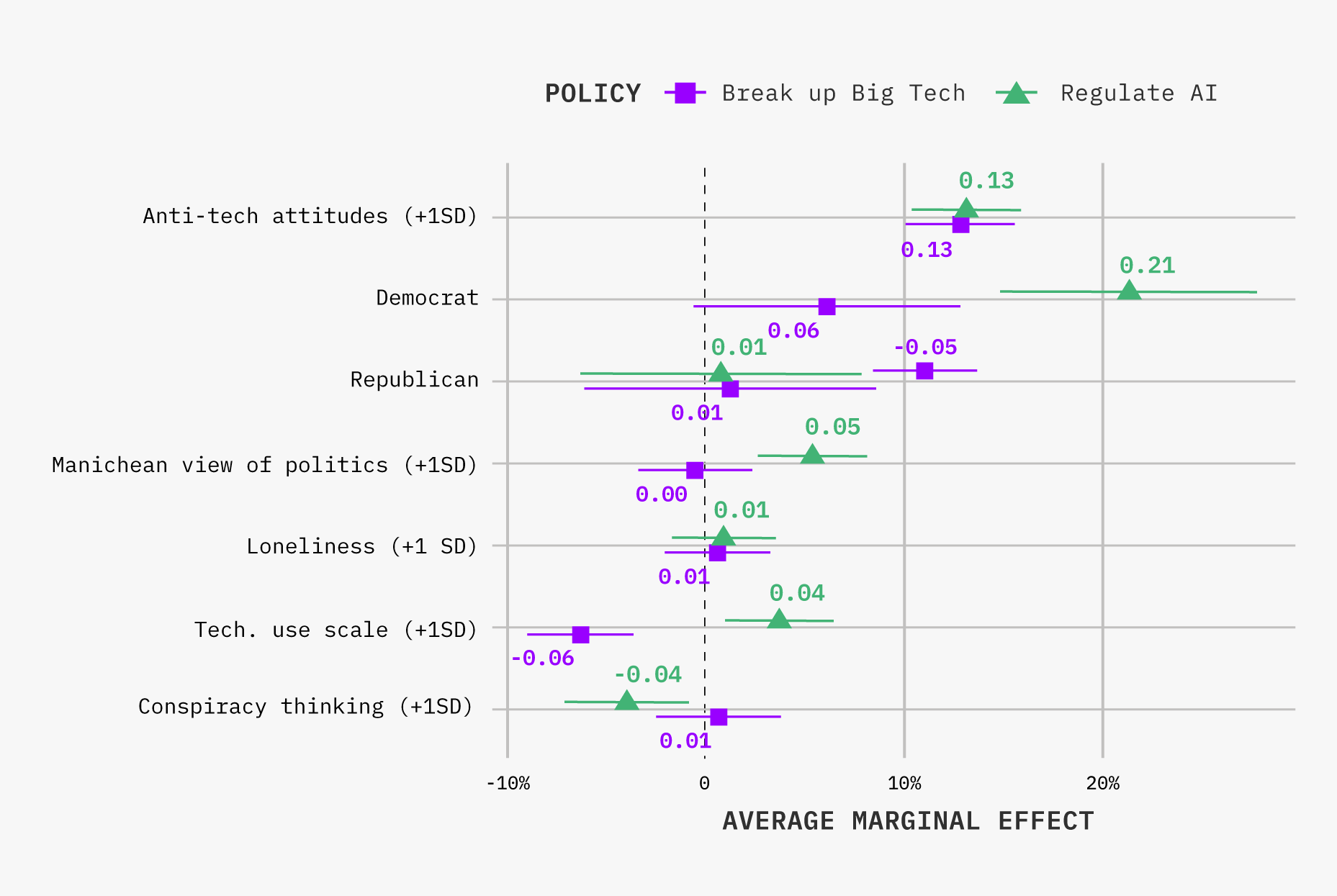

We see that a counterfactual one standard deviation increase in anti-tech attitudes is associated with an increase of 12.9 percentage points in support of breaking up Big Tech, whereas partisanship, anti-establishment orientation, and loneliness are not correlated with this policy preference. These and remaining conditional average marginal effects are displayed in figure 7.

The relationship between anti-tech attitudes and supporting regulation of AI is also positive and significant: we see an increase of 13.2 percentage points in the predicted probability of supporting regulation for a 1 SD increase in anti-tech attitudes, conditioning on demographics, partisanship, conspiracy thinking, loneliness, and the frequency of using technologies such as ChatGPT, smartwatches, smart home assistants, or social media. For this outcome variable, we see that Democrats are more supportive of regulation than independents, and that people who interact with the latest technology are not opposed to regulation (in fact, they are slightly more likely to support regulation of AI).

Setting the anti-tech attitudes to their maximum and keeping other covariates at their observed values for all respondents would yield an average predicted probability of regulation support of 84.7 percent. The same counterfactual exercise with anti-tech attitudes set to their minimum value gives us an average predicted probability of 12.9 percent. That is, a hypothetical min-to-max movement in anti-tech attitudes is estimated to produce a difference of 71.8 percentage points, which can be viewed as a massive effect.36
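The counterfactual exercise described above can be sketched in a few lines. This is an illustration under stated assumptions rather than the authors' code: the coefficients, intercept, variable names, and toy respondents are all hypothetical, and a logistic link is assumed for the binary outcome. The final lines reproduce only the arithmetic of the reported min-to-max difference.

```python
import math


def logistic(x):
    """Inverse logit: maps a linear predictor to a probability."""
    return 1.0 / (1.0 + math.exp(-x))


def avg_predicted_prob(rows, coefs, intercept, focal, value):
    """Average predicted probability with `focal` set to `value` for everyone,
    keeping all other covariates at their observed values."""
    probs = []
    for row in rows:
        counterfactual = dict(row, **{focal: value})
        linear = intercept + sum(coefs[k] * counterfactual[k] for k in coefs)
        probs.append(logistic(linear))
    return sum(probs) / len(probs)


# Hypothetical fitted model and toy respondents (not estimates from the paper).
coefs = {"anti_tech": 0.8, "democrat": 0.4}
intercept = -0.2
rows = [
    {"anti_tech": -1.0, "democrat": 1},
    {"anti_tech": 0.5, "democrat": 0},
    {"anti_tech": 2.0, "democrat": 0},
]
lo = avg_predicted_prob(rows, coefs, intercept, "anti_tech", -1.0)  # sample minimum
hi = avg_predicted_prob(rows, coefs, intercept, "anti_tech", 2.0)   # sample maximum
min_to_max = hi - lo

# Arithmetic behind the reported difference, in percentage points.
reported_diff = round(84.7 - 12.9, 1)
print(reported_diff)
```

Averaging over respondents' observed covariates, rather than plugging in covariate means, is what "keeping other covariates at their observed values" refers to.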

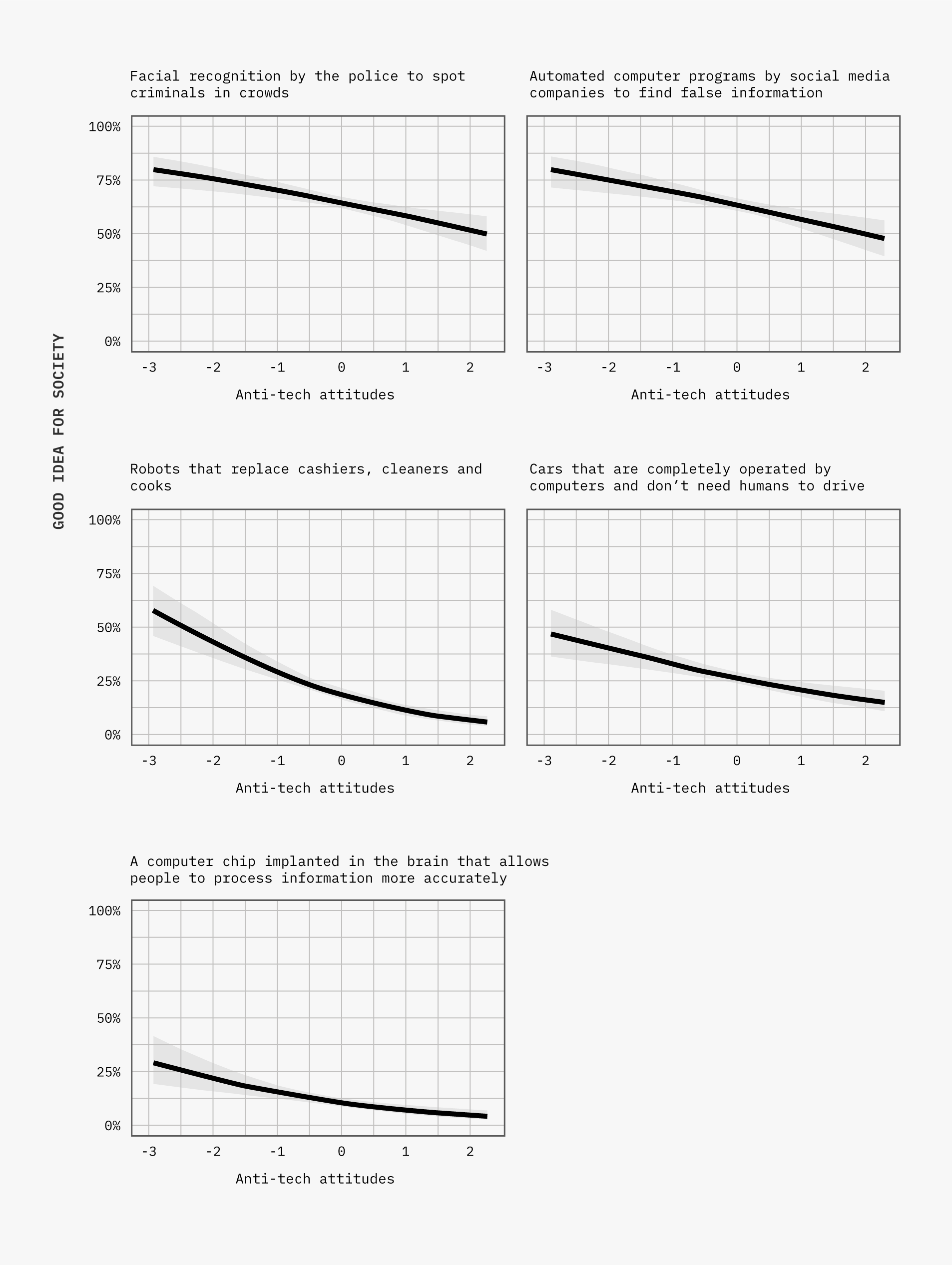

Evaluations of Specific Technologies

Figure 8 presents predicted evaluations of five emerging technologies, capturing whether respondents view them as beneficial or detrimental to society. These predictions are based on logistic regression models that again control for key observables including partisanship, loneliness, conspiracism, Manichean worldview, technology interest and use, and demographics. The x-axis represents the full range of anti-tech attitudes, while the y-axis shows the probability of supporting each technology. Across all five technologies, we observe a consistent negative relationship between anti-tech attitudes and support for technological adoption. However, the strength of this relationship and the baseline level of support vary considerably across technologies.

Facial recognition for police use and automated misinformation detection by social media companies stand out as the most widely accepted technologies. Even among respondents with strong (top quartile) anti-tech attitudes, support for these technologies registered above 50 percent. For those with the most pro-tech attitudes, support exceeded 75 percent for both facial recognition and automated misinformation detection.

In contrast, the other three technologies (self-driving cars, brain chip implants, and robots replacing service workers) face much more skepticism. The steepest decline in support relative to anti-tech attitudes is observed for robots replacing cashiers, cleaners, and cooks. While individuals with the most pro-tech attitudes show about 50 percent support for this technology, this drops to nearly zero for those with the strongest anti-tech sentiments. Self-driving cars and brain chip implants for enhanced information processing face considerable skepticism in general. Notably, even among the most pro-tech respondents, the predicted probability of supporting self-driving cars barely approaches 50 percent. For those with strong anti-tech attitudes, support drops to around 15 percent or less.

Interestingly, the results suggest a divide between technologies that might be perceived as enhancing public safety or combating misinformation (facial recognition and automated fact-checking) and those that more directly replace human capabilities or alter the human body (service robots, self-driving cars, and brain implants). This divide persists across the spectrum of anti-tech attitudes, potentially indicating broader societal concerns about job displacement and bodily autonomy that transcend general attitudes toward technology. These findings highlight the nuanced nature of public opinion on emerging technologies: while general anti-tech attitudes are a strong predictor of opposition to new technologies, the baseline level of acceptance varies significantly depending on the specific application and its perceived societal impact.

Approval of Politically Motivated Violence

Finally, we summarize our results from the firebomb vignette. We note that sympathy with the violent act was surprisingly high: 49 percent of respondents in dataset 1 agreed with “what the man said,” and 21 percent somewhat or strongly supported the action.37 A neutral option was provided when respondents were asked “Regardless of whether you agree or disagree with the man’s actions, how much do you agree or disagree with what the man said?” (five-point SA–SD scale, which has been dichotomized for the analysis that follows), but we forced respondents to take a stand on the man’s actions by using only a four-point scale (strongly support to strongly oppose) with no neutral option after asking “How much do you support or oppose the man’s actions?” We find that the probability of agreement with the sentiment of the letter (expressing the view that tech CEOs “just want to make money” and do not care if their products “divide our country, and hurt our children”) is 19 percentage points higher for each 1 SD increase in anti-tech attitudes (figure 9). The probability of agreement with the violent act itself does not rise with anti-tech attitudes, but it correlates positively with a need for chaos. Surprisingly, however, the need for chaos does not correlate with the weaker type of support (agreeing with the letter) in the expected direction.

Study 3: Persuasiveness of Arguments about AI

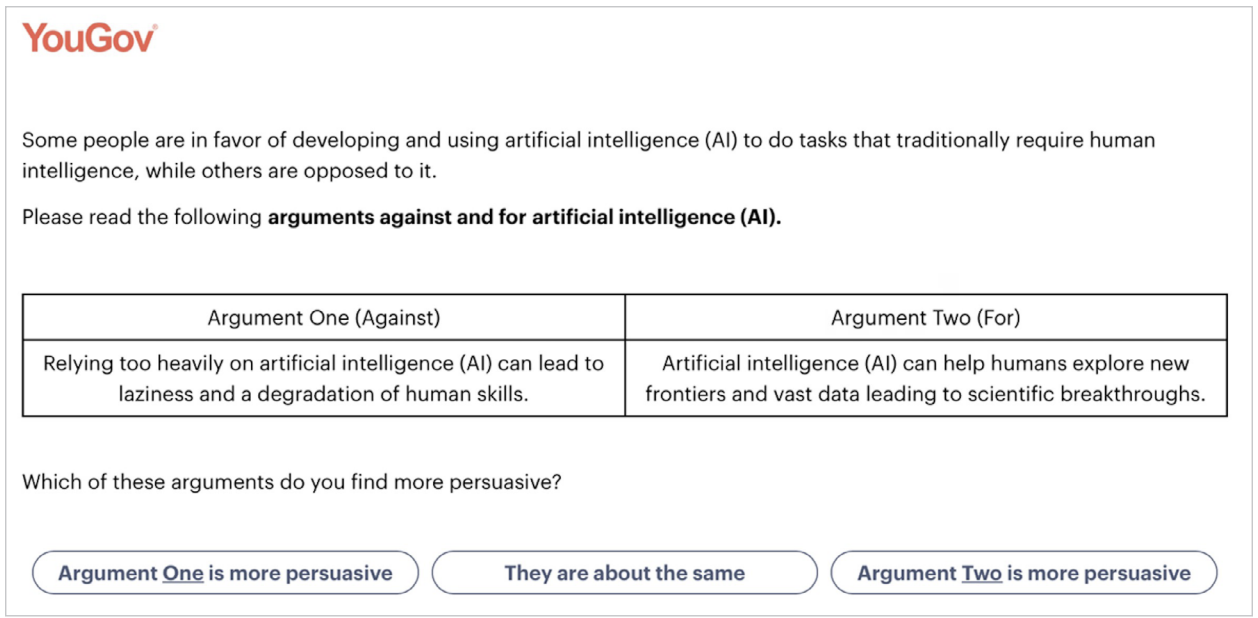

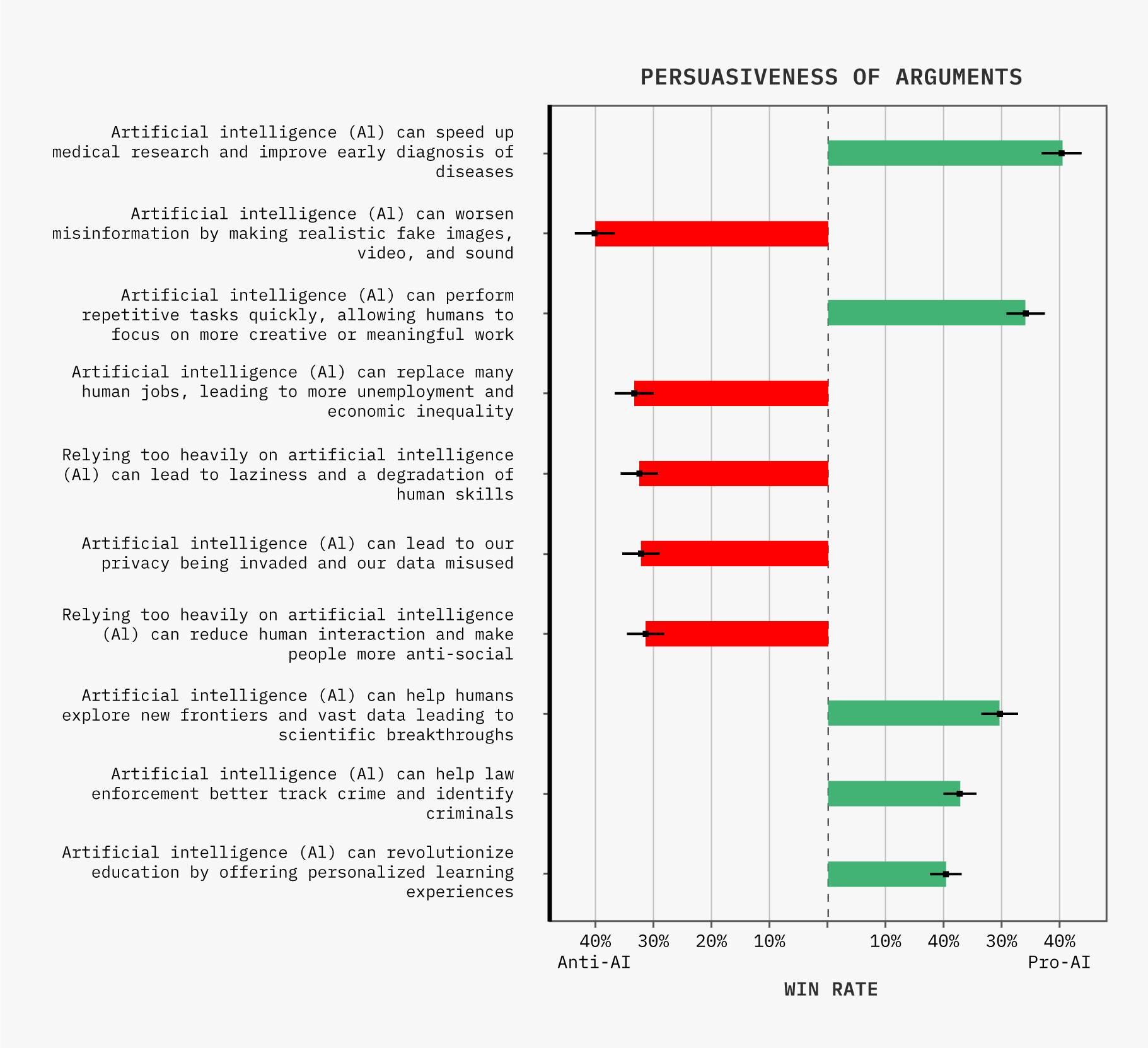

Studies 1 and 2 focus on the construction and measurement of anti-tech orientation. But a separate question is whether individuals can be persuaded about technology: are anti-tech perceptions fixed, or are there certain types of arguments that push the public to be more or less receptive? These questions are of particular import, since recent research finds that even amongst experts there are large disagreements over the risks of future technology like AI.38 In this study, we focus on arguments for and against AI, a relatively new technology. Each of our YouGov respondents was asked to evaluate three pairs of arguments about artificial intelligence. A sample screenshot is shown in figure 10 (we followed the approach proposed by Blumenau and Lauderdale).39

Our dataset contains 8,100 judgments (from 1,350 US respondents) about ten arguments (five pro-AI and five against AI). Participants were randomly shown two arguments, one in favor of AI and one against it. They then indicated which of the two (randomly paired) arguments they considered more persuasive, or whether they considered them to be equally persuasive. All arguments that a respondent could potentially evaluate are listed and ranked by their raw win rate in figure 11. We see that, on average, arguments against adopting AI were slightly more persuasive than pro-AI arguments. However, the most successful argument of all was a pro-AI argument that said, “AI can speed up medical research and improve early diagnosis of diseases.”
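A raw win rate of this kind can be computed directly from the pairwise judgments. The sketch below is illustrative only: the argument labels and toy judgments are invented, and counting an "equally persuasive" answer as half a win for each argument (`tie_credit=0.5`) is an assumption, since the text does not state how ties enter the raw win rate.

```python
from collections import defaultdict

def raw_win_rates(judgments, tie_credit=0.5):
    """judgments: iterable of (pro_arg, anti_arg, outcome) tuples, where
    outcome is 'pro', 'anti', or 'tie'. Returns {argument: win rate}."""
    score = defaultdict(float)  # wins, plus partial credit for ties
    shown = defaultdict(int)    # number of times each argument was displayed
    for pro_arg, anti_arg, outcome in judgments:
        shown[pro_arg] += 1
        shown[anti_arg] += 1
        if outcome == "pro":
            score[pro_arg] += 1.0
        elif outcome == "anti":
            score[anti_arg] += 1.0
        elif outcome == "tie":
            # Assumption: a tie gives each argument partial credit.
            score[pro_arg] += tie_credit
            score[anti_arg] += tie_credit
        else:
            raise ValueError(f"unknown outcome: {outcome!r}")
    return {arg: score[arg] / shown[arg] for arg in shown}

# Invented toy judgments; labels are hypothetical, not the survey wording.
toy = [
    ("pro_medical", "anti_jobs", "pro"),
    ("pro_medical", "anti_misinfo", "tie"),
    ("pro_tasks", "anti_jobs", "anti"),
    ("pro_tasks", "anti_misinfo", "anti"),
]
rates = raw_win_rates(toy)
ranking = sorted(rates, key=rates.get, reverse=True)
print(rates)
print(ranking)
```

Because every judgment pits one pro-AI argument against one anti-AI argument, a raw win rate does not adjust for how strong the opposing arguments happened to be.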

A separate analysis is provided in the SI (figure S.11), where we estimate the strengths of arguments while controlling for the relative strengths of the counter-arguments they were facing, using a Bradley-Terry model. The results are substantively unchanged: the three strongest and the three weakest arguments are the same under both approaches, with only small movements in the relative rankings of the arguments of medium strength.
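For readers unfamiliar with the Bradley-Terry model, the following is a minimal sketch of how argument strengths can be estimated from a matrix of pairwise wins. It uses a plain maximum-likelihood fit via the standard minorization-maximization (MM) iteration, which may differ from the specification reported in the SI. The function name `bradley_terry`, the toy win counts, and the decision to leave ties out of the matrix are all assumptions made for illustration.

```python
import numpy as np

def bradley_terry(wins, n_iter=500, tol=1e-10):
    """wins[i, j] = number of times item i was preferred over item j.
    Returns strengths that sum to 1, fitted by MM updates."""
    wins = np.asarray(wins, dtype=float)
    n_items = wins.shape[0]
    games = wins + wins.T            # comparisons held between each pair
    total_wins = wins.sum(axis=1)
    p = np.full(n_items, 1.0 / n_items)
    for _ in range(n_iter):
        # MM update: p_i <- W_i / sum_j n_ij / (p_i + p_j)
        denom = (games / (p[:, None] + p[None, :])).sum(axis=1)
        p_new = total_wins / denom
        p_new /= p_new.sum()
        if np.max(np.abs(p_new - p)) < tol:
            return p_new
        p = p_new
    return p

# Toy data: items 0-1 are pro-AI arguments, items 2-3 are anti-AI arguments.
# Only pro-versus-anti pairs are compared, mirroring the study design.
toy_wins = np.array([
    [0, 0, 7, 6],
    [0, 0, 3, 4],
    [3, 7, 0, 0],
    [4, 6, 0, 0],
])
strengths = bradley_terry(toy_wins)
print(np.round(strengths, 4))
```

In this toy example the two anti-AI arguments have the same raw win rate, and the model also assigns them equal strength; in general, the fitted strengths can reorder arguments whose raw win rates were earned against weaker or stronger opponents.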

The conclusion from this experimental study of persuasiveness is that people remain skeptical about new technologies like AI. But because these innovations are new (and in some cases hypothetical), we also show that citizens are somewhat persuadable. Emphasis on the positive medical benefits or replacement of repetitive tasks makes people more amenable to AI. But concerns about misinformation, replacement of human jobs, and more general degradation of human capacity make people more skeptical.

Conclusion

We fielded a pair of surveys and a survey experiment on persuasiveness to measure Americans’ attitudes toward several key questions related to technology. For instance, do Americans attribute certain societal problems, such as divisive politics, feelings of loneliness, and the propagation of hate and misinformation, to the influence of social media platforms? Do citizens feel threatened by the coming AI revolution? These questions are particularly important as AI technologies may increasingly become part of people’s daily lives. Moving beyond specific domains, is there a general anti-tech orientation? Aggregating our survey instruments to quantify broader anti-technology sentiments, we found that the first factor that emerged corresponded to a general, critical attitude toward modern technology (not limited to social media apps).

Our findings provide several insights about the nature and implications of anti-technology attitudes in the United States. We find that anti-tech sentiment is a coherent orientation, distinct from but related to other psychological and political factors. This orientation strongly predicts policy preferences and, in some cases, support for extreme actions. We identified which segments of the population were most likely to score high on our anti-tech orientation, finding that loneliness, conspiracy thinking, need for uniqueness, and need for chaos correlate with the general anti-technology factor (these relationships hold after controlling for partisanship and education). Beyond personal dispositions, we show that anti-tech sentiments shape respondents’ policy preferences, especially concerning the governance and regulation of technology. The paper also documents how anti-tech attitudes might breed acceptance or even endorsement of extremist actions, including support for violence against tech businesses or technological entities (or their proponents), establishing that there is a clear association between anti-tech beliefs and supporting justifications of violent actions against the leaders of tech companies. Finally, we show that the public can (currently) be persuaded by both pro- and anti-AI arguments, although anti-AI arguments were on average deemed more persuasive.

With these results in mind, what are the implications for theory, policy, and future research? Anti-tech attitudes represent a coherent orientation that is not purely explained by partisanship, suggesting that its roots may be more psychological than ideological. Given increasing criticisms of technology companies by certain politicians and elites, and given attempts to regulate social media and AI companies, anti-tech sentiment will likely remain a politically salient issue, possibly cutting across traditional party lines.40 This growing salience has led to increased scholarly attention on the role of technology companies in governance and society.

For instance, Cupać, Schopmans, and Tuncer-Ebetürk have cautioned that “technology corporations have emerged as a new quasi-governing class that holds political power without democratic legitimacy,” and Culpepper and Thelen have said that “[f]irms with platform power benefit from a deference from policymakers.”41 The noted decrease in state power extends to labor power as well; using perhaps deliberately loaded and provocative language, the dependence of some workers on digital platforms has been described as techno-feudalism, a concept related to concerns about “platformization” and surveillance.42 Furthermore, as other research has shown, entrepreneurs in the technology sector and other elites that are pro-technology and cheering the arrival of AI have very different preferences and attitudes than other citizens.43

Concerns about technology are likely to grow if deepfakes, misinformation, and AI-generated ads play increasing roles in political campaigns.44 Policymakers and technology companies would do well to place guardrails on such use. That said, perceptions among both citizens and regulators about the effects of digital media on democracy may not be in line with the existing available research. As Budak et al. note, “sweeping claims about the effects of exposure to false content online … are inconsistent with much of the current empirical evidence.”45 This discrepancy between public perception and scientific evidence highlights the need for continued research and effective communication of scientific findings to the public and policymakers.

To the extent that dangers of digital media or new apps may be exaggerated (or the benefits underappreciated) by citizens, it is possible that informational interventions could correct misperceptions or that intuition about fairness (e.g., fairness of automation) could be adjusted via framing.46 And, as our pairwise experiment suggests, supporters of new technology may wish to emphasize and explore the positive arguments for AI—for example, that AI can improve medical care and disease diagnoses—while addressing negative concerns of the public, namely increased misinformation and job loss due to automation. While these strategic communication approaches may help shape public opinion, it is crucial to ground such efforts in a thorough understanding of existing anti-technology sentiments and their implications. At the same time, the welfare effects of new technologies are a subject of continued study, and future work needs to grapple with both direct and subtle network effects and consumption spillovers (e.g., users demand payment to stop using apps like Instagram and TikTok, but they would simultaneously be willing to pay for an outcome where everyone de-activated these apps, according to incentivized experiments by Bursztyn et al.).47

As e-commerce, ride-sharing, online dating, cryptocurrency trading, the Internet of things (network of physical objects embedded with sensors), ubiquitous computing, applications of AI, and other technologies continue to emerge and reshape the economy, anti-tech sentiment will play an increasing role in politics.48 How politicians seek to mobilize supporters with anti-tech appeals, and whether it begins to emerge as a partisan issue remain important future questions; but our study advances our understanding of the pre-existing reservoir of anti-tech sentiment by providing a comprehensive examination of anti-tech attitudes, their correlates, and their potential consequences. We have shown that anti-tech sentiment is a coherent orientation with significant implications for policy preferences and potential support for contentious actions. Future work should continue to explore the evolution of anti-tech attitudes over time, these attitudes’ relationship to emerging technologies, and their impact on political behavior and policy outcomes.

You can find the Supporting Information for this research here.

1 Evgeny Morozov (@evgenymorozov), “‘Democratizing’ AI is like ‘democratizing’ a tank,” X (formerly Twitter), July 24, 2024, 2:50 a.m., https://x.com/evgenymorozov/status/1816003117776830677.

2 Jonathan Haidt (@JonHaidt), “Having AI servants will make everything easier for adults,” X (formerly Twitter), May 12, 2024, 8:18 a.m., https://twitter.com/JonHaidt/status/1789631328553472509.

3 Amy Orben, “The Sisyphean Cycle of Technology Panics,” Perspectives on Psychological Science 15, no. 5 (2020): 1143–57.

4 J. M. Twenge, “Increases in Depression, Self-harm, and Suicide among US Adolescents after 2012 and Links to Technology Use: Possible Mechanisms,” Psychiatric Research and Clinical Practice 2, no. 1 (2020): 19–25.

5 Nina Schick, Deepfakes: The Coming Infocalypse (New York: Grand Central Publishing, 2020); Daron Acemoglu and Simon Johnson, Power and Progress: Our Thousand-Year Struggle over Technology and Prosperity (London: Hachette UK, 2023); Daron Acemoglu, Simon Johnson, and Austin Lentsch, “The Hollywood Writers’ AI Fight Is Everyone’s Fight,” Project Syndicate, August 2, 2023, https://www.project-syndicate.org/commentary/ai-wga-writers-strike-future-of-knowledge-work-by-daron-acemoglu-et-al-2023-08; Ian Bremmer, “How the World Must Respond to the AI Revolution,” TIME, May 31, 2023, https://time.com/6283716/world-must-respond-to-the-ai-revolution/.

6 Lee Rainie et al., AI and Human Enhancement: Americans’ Openness Is Tempered by a Range of Concerns (Washington, DC: Pew Research Center, March 2022), 6; Daniel Colson, “Poll Shows Overwhelming Concern about Risks From AI as New Institute Launches to Understand Public Opinion and Advocate for Responsible AI Policies,” Artificial Intelligence Policy Institute, August 11, 2023, https://theaipi.org/poll-shows-overwhelming-concern-about-risks-from-ai-as-new-institute-launches-to-understand-public-opinion-and-advocate-for-responsible-ai-policies/ (see full toplines, page 3, item 8).

7 Shoshana Zuboff, The Age of Surveillance Capitalism: The Fight for a Human Future at the New Frontier of Power (London: Profile Books, 2018); Jamie Bartlett, The People vs. Tech: How the Internet Is Killing Democracy (And How We Save It) (New York: Random House, 2018); Schick, Deepfakes; Jodi Dean, “The Neofeudalising Tendency of Communicative Capitalism,” tripleC: Communication, Capitalism, & Critique 22, no. 1 (2024): 197–207; Bremmer, “How the World Must Respond.”

8 Kaczynski’s nearly twenty-year bombing run killed three people and injured more than twenty. When in 1995 the Washington Post and the New York Times published his manifesto—under threat—it was largely dismissed as “ramblings” of “a hermit.” Peter H. King, “Unabomber Manifesto Revisited,” Los Angeles Times, April 10, 1996, https://www.latimes.com/archives/la-xpm-1996-04-10-mn-56869-story.html. But as the years went by, and as social media and tech companies became increasingly central to the economy and people’s everyday lives, “Uncle Ted,” as he was known to his admirers, and his manifesto found new resonance among those on both the left and right. John H. Richardson, “Children of Ted: Two Decades after His Last Deadly Act of Ecoterrorism, the Unabomber has Become an Unlikely Prophet to a New Generation of Acolytes,” New York Magazine, December 11, 2018, https://nymag.com/intelligencer/2018/12/the-unabomber-ted-kaczynski-new-generation-of-acolytes.html. Elon Musk said that Kaczynski “might not have been wrong” and Tucker Carlson called him a “bad person, but smart.” Michael Safi, “‘His Ideas Resonate’: How the Unabomber’s Dangerous Anti-tech Manifesto Lives On,” The Guardian, June 19, 2023, https://www.theguardian.com/us-news/2023/jun/19/unabomber-ted-kaczynski-dangerous-anti-tech-manifesto-lives-on.

9 J. M. Twenge et al., “Increases in Depressive Symptoms, Suicide-Related Outcomes, and Suicide Rates among US Adolescents after 2010 and Links to Increased New Media Screen Time,” Clinical Psychological Science 6, no. 1 (2018): 3–17; Twenge, “Increases in Depression;” Jonathan Haidt, “A Guilty Verdict,” Nature 578 (2020): 226–27; Jonathan Haidt, The Anxious Generation: How the Great Rewiring of Childhood Is Causing an Epidemic of Mental Illness (New York: Random House, 2024); Jim Waterson and Dan Milmo, “Facebook Whistleblower Frances Haugen Calls for Urgent External Regulation,” The Guardian, October 25, 2021, https://www.theguardian.com/technology/2021/oct/25/facebook-whistleblower-frances-haugen-calls-for-urgent-external-regulation.

10 Janna Anderson and Lee Rainie, Many Tech Experts Say Digital Disruption Will Hurt Democracy (Washington, DC: Pew Research Center, February 2020).

11 Sean Kates, Jonathan Ladd, and Joshua A. Tucker, How Americans’ Confidence in Technology Firms Has Dropped: Evidence from the Second Wave of the American Institutional Confidence Poll (Washington, DC: Brookings Institution, June 2023).

12 S. Altay et al., “A Survey of Expert Views on Misinformation: Definitions, Determinants, Solutions, and Future of the Field,” Harvard Kennedy School Misinformation Review 4, no. 4 (2023): 1–34. Some recent work has argued that the impact of digital media on recipients of false or misleading content has been overstated. Sacha Altay, Manon Berriche, and Alberto Acerbi, “Misinformation on Misinformation: Conceptual and Methodological Challenges,” Social Media + Society 9, no. 1 (2023); Ceren Budak et al., “Misunderstanding the Harms of Online Misinformation,” Nature 630, no. 8015 (2024): 45–53; Jan Zilinsky et al., “Justifying an Invasion: When Is Disinformation Successful?,” Political Communication, May 2024, 1–22.

13 Astrid Schepman and Paul Rodway, “The General Attitudes toward Artificial Intelligence Scale (GAAIS): Confirmatory Validation and Associations with Personality, Corporate Distrust, and General Trust,” International Journal of Human-Computer Interaction 39, no. 13 (2023): 2724–41; German Neubaum and Nicole C. Krämer, “Monitoring the Opinion of the Crowd: Psychological Mechanisms Underlying Public Opinion Perceptions on Social Media,” Media Psychology 20, no. 3 (2017): 502–31; M. Laeeq Khan, “Social Media Engagement: What Motivates User Participation and Consumption on YouTube?,” Computers in Human Behavior 66 (2017): 236–47; K. C. Yam et al., “When Your Boss Is a Robot: Workers Are More Spiteful to Robot Supervisors that Seem More Human,” Journal of Experimental Social Psychology 102 (June 2022): 1–12; Baobao Zhang and Allan Dafoe, “Artificial Intelligence: American Attitudes and Trends” (working paper, SSRN, January 2019); Markus Blut and Cheng Wang, “Technology Readiness: A Meta-analysis of Conceptualizations of the Construct and Its Impact on Technology Usage,” Journal of the Academy of Marketing Science 48 (2020): 649–69.

14 Matthew J. Hornsey, Emily A. Harris, and Kelly S. Fielding, “The Psychological Roots of Anti-Vaccination Attitudes: A 24-Nation Investigation,” Health Psychology 37, no. 4 (2018): 307; Adrian Furnham, “Are Modern Health Worries, Personality and Attitudes to Science Associated with the Use of Complementary and Alternative Medicine?,” British Journal of Health Psychology 12, no. 2 (2007): 229–43; Matthew Motta and Timothy Callaghan, “The Pervasiveness and Policy Consequences of Medical Folk Wisdom in the US,” Scientific Reports 10 (July 2020).

15 Rani Molla, “Poll: Most Americans Want to Break up Big Tech,” Vox, January 26, 2021, https://www.vox.com/2021/1/26/22241053/antitrust-google-facebook-break-up-big-techmonopoly; Monica Anderson, Americans’ Views of Technology Companies (Washington, DC: Pew Research Center, April 2024).

16 Douglas Gollin, Casper Worm Hansen, and Asger Mose Wingender, “Two Blades of Grass: The Impact of the Green Revolution,” Journal of Political Economy 129, no. 8 (2021): 2344–84; Jonas Hjort and Jonas Poulsen, “The Arrival of Fast Internet and Employment in Africa,” American Economic Review 109, no. 3 (2019): 1032–79.

17 Seymour Martin Lipset, “Some Social Requisites of Democracy: Economic Development and Political Legitimacy,” American Political Science Review 53, no. 1 (1959): 69–105; Adam Przeworski and Fernando Limongi, “Modernization: Theories and Facts,” World Politics 49, no. 2 (1997): 155–83.

18 Emile Durkheim, The Division of Labour in Society, trans. W. D. Halls (New York: Free Press, 1997); Herbert Marcuse, One-Dimensional Man: Studies in the Ideology of Advanced Industrial Society (Boston: Beacon Press, 1964); Robert D. Putnam, Bowling Alone: The Collapse and Revival of American Community (New York: Simon and Schuster, 2000).

19 Larry D. Rosen et al., “The Media and Technology Usage and Attitudes Scale: An Empirical Investigation,” Computers in Human Behavior 29, no. 6 (2013): 2501–11; Sophie Borwein et al., “Perceived Technological Threat and Vote Choice: Evidence from 15 European Democracies,” West European Politics, February 2023, 1–28; Baobao Zhang, “No Rage Against the Machines: Threat of Automation Does Not Change Policy Preferences,” Proceedings of the 2022 AAAI/ACM Conference on AI, Ethics, and Society, July 2022, 856–66.

20 Richard Baldwin, The Globotics Upheaval: Globalization, Robotics, and the Future of Work (Oxford: Oxford University Press, 2019); Durkheim, Division of Labour.

21 Marcuse, One-Dimensional Man.

22 Jaron Lanier, Who Owns the Future? (New York: Simon and Schuster, 2014); Tim Wu, The Attention Merchants: The Epic Scramble to Get Inside Our Heads (New York: Vintage Books, 2017).

23 Acemoglu and Johnson, Power and Progress.

24 Jerry Mander, Four Arguments for the Elimination of Television (New York: Quill, 1978).

25 Ibid.

26 Sherry Turkle, Alone Together: Why We Expect More from Technology and Less from Each Other (New York: Basic Books, 2011); Jean M. Twenge et al., “Underestimating Digital Media Harm,” Nature Human Behaviour 4, no. 4 (2020): 346–48.

27 Evgeny Morozov, The Net Delusion: The Dark Side of Internet Freedom (New York: PublicAffairs, 2012); Helen Margetts, “Rethinking Democracy with Social Media,” Political Quarterly 90, no. S1 (2018); Joshua A. Tucker et al., “From Liberation to Turmoil: Social Media and Democracy,” Journal of Democracy 28, no. 4 (October 2017): 46–59.

28 Ada W. Finifter, “Dimensions of Political Alienation,” American Political Science Review 64, no. 2 (1970): 389–410; A. Langenkamp, “Lonely Hearts, Empty Booths? The Relationship between Loneliness, Reported Voting Behavior and Voting as Civic Duty,” Social Science Quarterly 102, no. 4 (2021): 1239–54; Leo Srole, “Social Integration and Certain Corollaries: An Exploratory Study,” American Sociological Review 21, no. 6 (1956): 709–16.

29 J. E. Uscinski et al., “American Politics in Two Dimensions: Partisan and Ideological Identities versus Anti-establishment Orientations,” American Journal of Political Science 65, no. 4 (2021): 877–95.

30 Miles T. Armaly and Adam M. Enders, “Who Supports Political Violence?,” Perspectives on Politics 22, no. 2 (2024): 427–44; Kevin Arceneaux et al., “Some People Just Want to Watch the World Burn: The Prevalence, Psychology, and Politics of the ‘Need for Chaos,’” Philosophical Transactions of the Royal Society B 376, no. 1822 (April 2021); Joseph E. Uscinski and Adam M. Enders, Conspiracy Theories: A Primer (Lanham, MD: Rowman & Littlefield, 2023); Adam M. Enders et al., “On Modeling the Correlates of Conspiracy Thinking,” Scientific Reports 13, no. 8325 (May 2023).

31 Sophie Borwein et al., “Perceived Technological Threat;” Carl Benedikt Frey, Thor Berger, and Chinchih Chen, “Political Machinery: Did Robots Swing the 2016 US Presidential Election?,” Oxford Review of Economic Policy 34, no. 3 (2018): 418–42; Zhang, “No Rage.”

32 Stephanie A. Bell and Anton Korinek, “AI’s Economic Peril,” Journal of Democracy 34, no. 4 (2023): 151–61.

33 Respondents recruited via Lucid who failed the initial screener questions were not allowed to continue taking the survey, and we dropped participants who completed the survey in fewer than 180 seconds. However, we typically kept those respondents who did not pass an attention check that was embedded in the middle of the survey.

34 For example, the first survey included measures of respondents’ need for uniqueness and their need for chaos, which we decided to omit from the YouGov survey due to space constraints. For more information on the need for chaos scale, see Arceneaux et al., “Some People.”

35 Jens Hainmueller, Jonathan Mummolo, and Yiqing Xu, “How Much Should We Trust Estimates from Multiplicative Interaction Models? Simple Tools to Improve Empirical Practice,” Political Analysis 27, no. 2 (2019): 163–92.

36 For breaking up Big Tech, the corresponding average predictions are 81.6 percent at the maximum value of anti-tech attitudes, and 12.1 percent at the minimum level of anti-tech attitudes.

37 These numbers may overestimate support for violence, perhaps due to trolling, acquiescence, or other types of bias and measurement error; but even if these proportions are inflated, they still seem non-trivial.

38 Katja Grace et al., “When Will AI Exceed Human Performance? Evidence from AI Experts,” Journal of Artificial Intelligence Research 62 (2018): 729–54; Josh Rosenberg et al., Roots of Disagreement on AI Risk (Forecasting Research Institute, 2023). Also relevant in this context is a recent Pew Research Center analysis: Rainie et al. report that opposition to a given technology hinges on the stated reason why a given tool might be used. They note that “[b]rain chip implants are currently in use and development for a variety of purposes, apart from their potential use to enhance how people process information … [and a] large share of Americans (77%) say they would favor the use of computer implants in the brain to allow increased movement for people who are paralyzed … [but] Americans are far less supportive of other uses of these devices, where there is no clear therapeutic benefit.” Rainie et al., AI and Human Enhancement, 94.

39 Jack Blumenau and Benjamin E. Lauderdale, “The Variable Persuasiveness of Political Rhetoric,” American Journal of Political Science 68, no. 1 (2024): 255–70. We wanted to give each respondent an opportunity to indicate whether a particular claim was considered valid or persuasive, even if a respondent already held a particular stance. For example, a person might oppose adoption of AI in general, and would always answer a policy question negatively, but she might be willing to acknowledge that some pro-AI arguments are good or persuasive, without being required to take a direct or explicit pro-AI stance. In other words, like Blumenau and Lauderdale, we asked respondents to indicate which of two arguments they viewed as better.

40 Robert Gorwa, The Politics of Platform Regulation: How Governments Shape Online Content Moderation (Oxford: Oxford University Press, 2024).

41 Jelena Cupać, Hendrik Schopmans, and Irem Tuncer-Ebetürk, “Democratization in the Age of Artificial Intelligence: Introduction to the Special Issue,” Democratization 31, no. 5 (2024): 899–921; Pepper D. Culpepper and Kathleen Thelen, “Are We All Amazon Primed? Consumers and the Politics of Platform Power,” Comparative Political Studies 53, no. 2 (2020): 288–318.

42 Yanis Varoufakis, Technofeudalism: What Killed Capitalism (London: The Bodley Head, 2023); Dean, “Neofeudalising;” Zuboff, Age of Surveillance Capitalism.

43 David E. Broockman, Gregory Ferenstein, and Neil Malhotra, “Predispositions and the Political Behavior of American Economic Elites: Evidence from Technology Entrepreneurs,” American Journal of Political Science 63, no. 1 (2019): 212–33.

44 Rumman Chowdhury, “AI-fuelled Election Campaigns Are Here—Where Are the Rules?,” Nature 628, no. 8007 (2024): 237; Ali Swenson and Christine Fernando, “As Social Media Guardrails Fade and AI Deepfakes Go Mainstream, Experts Warn of Impact on Elections,” PBS, December 27, 2023, https://www.pbs.org/newshour/politics/as-social-media-guardrails-fade-and-ai-deepfakes-go-mainstream-experts-warn-of-impact-on-elections.

45 Budak et al., “Misunderstanding.”

46 Ian G. Anson, “Epistemic Confidence Conditions the Effectiveness of Corrective Cues against Political Misperceptions,” Research & Politics 9, no. 2 (2022); Ethan Porter and Thomas J. Wood, False Alarm (Cambridge: Cambridge University Press, 2019); Beatrice Magistro et al., “Attitudes toward Automation and the Demand for Policies Addressing Job Loss: The Effects of Information about Trade-offs,” Political Science Research and Methods, February 2024, 1–24.

47 Leonardo Bursztyn et al., “When Product Markets Become Collective Traps: The Case of Social Media” (working paper 31771, National Bureau of Economic Research, Cambridge, MA, October 2023).

48 Haidt, Anxious Generation; Jaron Lanier, Ten Arguments for Deleting Your Social Media Accounts Right Now (New York: Random House, 2018). At the same time, social norms, personal preferences, and rules in private organizations such as schools are bound to also evolve as the downsides of recent technology become more salient, and these developments could mitigate technology-related resentments.