Testimony Before the House Committee on Oversight and Government Reform Subcommittee on Economic Growth, Energy Policy, and Regulatory Affairs

Hearing: America’s AI Moonshot: The Economics of AI, Data Centers, and Power Consumption

Thank you, Subcommittee Chairman Burlison, Subcommittee Ranking Member Frost, and subcommittee members for having me here today to talk about how to maximize the U.S. opportunity in artificial intelligence. I am Neil Chilson, the Head of AI Policy at the Abundance Institute.

The Abundance Institute is a mission-driven nonprofit dedicated to creating the cultural and policy environment necessary for emerging technologies to germinate, develop, and thrive, and to thereby perpetually expand widespread human prosperity and abundance.

The current wave of advanced computing technologies, commonly referred to as “artificial intelligence,” has the potential to create enormous economic growth and human flourishing across the United States. The U.S. private sector leads the world in innovation, investment, and deployment of AI technologies. But realizing this technology’s immense opportunity requires the government to tackle some important challenges facing both AI innovators and the energy infrastructure providers on which they rely. For the rocket ship of the U.S. private sector to lift us into the AI future, Congress must build the right launchpad.

The Importance of an AI Moonshot

AI will drive unprecedented productivity gains and broad economic expansion. While experts cannot precisely estimate the economic impact of this rapidly improving general-purpose technology, most agree the magnitude is large.1 Technology industry analyst firm International Data Corporation estimates that AI will have a “cumulative global economic impact of $19.9 trillion through 2030 and drive 3.5% of global GDP in 2030.”2 Even though adoption and integration of this technology into industry is early and ongoing, research already demonstrates strong worker productivity gains. According to Stanford’s 2024 AI Index report, “numerous studies rigorously examined AI’s productivity impacts, offering more conclusive evidence” that AI enhances worker productivity.3 For example, one study showed users of AI “completed tasks in 26% to 73% less time than their counterparts without AI access” depending on the types of tasks.4 A Harvard Business School study demonstrated that employees with GPT-4 access “increased their productivity on a selection of consulting tasks by 12.2%, speed by 25.1%, and quality by 40.0%.”5 AI also shows promise in enhancing the work of human teams, resulting in faster, better work and happier team members.6 As industries integrate AI, researchers expect reinvigorated productivity growth – leading to higher output, improved wages, and a more prosperous society for Americans.

AI won’t just make workers more productive in their old jobs. It is transforming jobs and powering the creation of new products, services, and entire industries. By handling repetitive tasks and analyzing vast data troves, AI frees up human creativity to focus on breakthroughs and solve complex problems. In many cases, using AI benefits lower-skilled and new workers most, leveling them up faster.7 Similarly, AI can empower smaller teams of entrepreneurs and small businesses to improve efficiency and expand their capacity, bringing some of the benefits of scale to smaller organizations.8 The result is a more dynamic, competitive economy – one where American companies lead in cutting-edge fields and generate high-quality jobs for the future.

Some of the most tangible and exciting benefits of AI are emerging in critical everyday sectors like healthcare and transportation. Our public opinion research demonstrates that healthcare is the most compelling use case for AI,9 and for good reason. Recent AI developments in healthcare are difficult to quantify economically but promise enormous impact:

- AI-powered tools are already saving lives by assisting in rare disease treatment where investment is scarce.10 Of the rare diseases that together affect tens of millions of Americans, more than 90% have no approved treatments. Because of the small number of affected individuals (some diseases might affect just a few dozen individuals per year), pharmaceutical companies lack the financial incentives to research treatments. However, researchers are successfully using AI tools to identify already approved drugs that could be used to treat such patients.11

- Researchers have developed an AI model that detects a common gynecological cancer sooner and more accurately, achieving a 99.26% accuracy rate where existing techniques were about 80% accurate. The same team has tested the methodology successfully on other cancers as well.12

- Machine learning algorithms power brain-computer interfaces that enable individuals with neurological injuries or diseases to speak and move. A recent advance in the technology increased the accuracy of the predicted speech so much that the doctors said, “We can basically call it perfect.”13

Other AI benefits in the healthcare system are more mundane, if easier to estimate monetarily. AI is expected to greatly streamline administrative tasks, which currently make up about 25% of healthcare costs.14 One 2023 paper estimates that the technologies available at that time, if implemented across the healthcare system, could save “$200 billion to $360 billion annually in 2019 dollars, without sacrificing quality and access.”15 In pharmaceuticals and drug discovery, experts estimate that improving the speed and quality of research and development could generate $60 billion to $110 billion in value annually.16

In transportation, AI-powered systems are making travel safer and more efficient. Self-driving vehicles could reduce traffic accidents by as much as 90%, saving approximately $190 billion per year.17 Companies such as UPS are already using older AI-powered smart logistics that cut fuel use and delivery times, saving more than 10 million gallons of fuel per year.18 These advances not only boost productivity in each sector but also enhance the quality of life for millions of Americans.

As we look ahead, embracing AI’s transformative potential is key to sustaining American economic leadership. Just as past innovations like electricity and the internet fueled U.S. prosperity, AI can drive a new era of growth and help secure our position as the world’s leading economy. By investing in AI research, talent, and infrastructure – and fostering a pro-innovation policy environment – we ensure that the benefits of AI (higher productivity, healthier people, new jobs, and better services) accrue here at home. With an optimistic, forward-looking approach, the United States can lead the AI revolution, reaping the rewards of this transformative technology for decades to come.

Achieving American AI Dominance

The Trump administration is actively pursuing a strategy for American AI dominance – where the U.S. continues to lead the world in AI development and deployment – focused on removing barriers to American innovators. Executive Order 14179, titled “Removing Barriers to American Leadership in Artificial Intelligence,” set the policy of the U.S. as “to sustain and enhance America’s global AI dominance in order to promote human flourishing, economic competitiveness, and national security.”19

Vice President J.D. Vance has filled out that agenda in several speeches, indicating that the administration is focused on getting government out of the way. He explained to an international gathering at the Paris AI Summit that “[W]e believe that excessive regulation of the … AI sector could kill a transformative industry just as it’s taking off, and we’ll make every effort to encourage [pro-growth] AI policies.”20 He went on to recognize that the U.S. leads in AI because of this light-touch approach:

“[T]he development of cutting-edge AI in the U.S. is no accident. By preserving an open regulatory environment, we’ve encouraged American innovators to experiment and to make unparalleled R and D investments.”21

And he specifically noted that “[W]ith the president’s recent executive order on AI, we’re developing an AI Action Plan that avoids an overly precautionary regulatory regime while ensuring that all Americans benefit from the technology and its transformative potential.”22 (emphasis added)

In that same speech, Vice President Vance also emphasized the important role of good energy policy in AI dominance, noting “There’s no issue we worry about more than regulation when it comes to energy” and “AI cannot take off unless the world builds the energy infrastructure to support it.”

This optimistic and ambitious attitude toward AI innovation is a great launching pad for the U.S. AI moonshot effort. But there is much work to be done.

The Software/Hardware Divide: Regulatory Challenges to America’s AI Moonshot

A U.S. AI moonshot requires cutting-edge software and robust infrastructure. Both need supportive policy environments and action at the Federal level, but the challenges facing them are very different.

Improving the Software Regulatory Status Quo

The U.S. software regulatory environment is light-touch and sector-specific, but increasingly chaotic. AI innovation has blossomed in this permissionless environment, but that environment is under threat.

American AI development benefits from a light touch approach

U.S. software development generally, and AI development specifically, are currently governed through a combination of tech-agnostic laws on fraud, civil rights, unfair practices, and unsafe products. Tort law, such as negligence and product liability, provides court-based remedies for other harms.

The U.S. has no “Federal Computing Agency,” for constitutional, legal, and pragmatic reasons. Congress may only delegate regulatory authority when it provides an intelligible principle to guide agency action. But for general-purpose technologies with economy-wide applications, any agency sufficiently broad in authority would lack an intelligible principle; it would have to be a mini-Congress, regulating a wide swath of the economy with less democratic input or accountability than the legislative branch. Moreover, because courts have determined that code is speech (in part because it communicates and generates information), government controls on software raise First Amendment concerns.

Thus, rather than regulating all software through a single agency, the U.S. indirectly regulates some software by regulating industries, such as medical devices, aviation, and finance, which happen to use software. Such laws and regulations typically require certain outcomes (such as safe medical devices) or prohibit certain kinds of conduct (such as discrimination), regardless of the tools or techniques involved. For example, if a credit provider’s decisions create an unlawful disparate treatment or disparate impact, the provider violates the Equal Credit Opportunity Act, regardless of whether the decision was made by a person, an automated system, or “AI.” There are parallel laws, regulations, and agencies at the federal and state level governing high-impact areas like hiring, credit, criminal justice, and critical infrastructure.

When software academics, researchers, and practitioners need to coordinate on technical issues, they have used non-governmental standard-setting organizations like the IEEE or the World Wide Web Consortium.

This governance structure has served the U.S. well, and it would serve the new AI wave of software equally well. Our permissionless approach has enabled software to flow into a wide range of uses across all industries, while still dealing with issues when they arise. American AI dominance requires widespread deployment and adoption. Light-touch regulation will help AI and its benefits to spread through the American economy.

The growing challenge of state legislation

There is, however, a growing challenge. More than nine hundred state bills to regulate AI have been introduced across the country since January 1, 2025.23 These bills would impose a wide range of requirements, from helpful measures targeted at state concerns to broad and onerous regulation of the entire industry. Other state AI bills, while well-intentioned, do not help or may actively undermine the ability of the U.S. to capitalize on the promise of AI. And in any case, a patchwork of parochial regulation is inconsistent with fostering AI as an urgent national priority.

However, the laboratories of the states have produced excellent pro-innovation approaches as well.

The Utah AI Act and H.B. 452

Utah’s AI Act stands out as a thoughtful, innovation-friendly approach to AI accountability.24 It embodies a balanced, light-touch approach that ensures existing law applies to AI uses while providing a clear path to building legislative solutions for any gaps that might develop.

Rather than creating an entirely new regulatory regime for AI, the Utah AI Act extends traditional consumer protection laws to cover deceptive or fraudulent uses of AI systems. Under this model, companies are required to disclose when an AI system directly interacts with consumers. The law avoids an exhaustive pre-deployment review process; instead, it makes clear that there is no AI exception to the law. If an AI system is used to deceive or defraud, the responsible entity will be held liable under the same statutes that apply to any other deceptive or fraudulent conduct. This ensures that businesses remain accountable while still allowing for flexible AI development.

The Utah AI Act also establishes an Office of Artificial Intelligence Policy (OAIP), which among other things oversees the AI Learning Laboratory Program. The Learning Lab is a structured environment where businesses engage with AI policy discussions and regulatory testing, in conjunction with regulatory mitigation programs that allow businesses to test AI applications without facing immediate restrictive mandates. Such an environment minimizes upfront compliance costs, accelerates market entry, and ultimately creates a fertile ground for innovation.

In November 2024, the Utah OAIP signed its first regulatory mitigation agreement with ElizaChat, developer of an AI-powered mental wellness app for teenagers. The app helps students improve relationships, manage emotions, and set personal goals. The mental health of teenagers is of intense interest and importance, and we need innovative new tools, but the legal environment can be unclear.

The mitigation agreement balanced these needs. ElizaChat committed to best practices in data privacy, mental health promotion, and adult supervision in sensitive scenarios. The agreement required protocols for escalating severe cases to trusted adults and allowed a 30-day period to address (through referrals to clinicians) situations where interactions may inadvertently constitute mental health therapy, a regulated practice.

This collaborative approach showcases Utah’s AI Act as an effective, supportive, yet accountable approach that encourages innovation in AI, particularly within the critical healthcare and mental wellness areas.

OAIP’s experience with ElizaChat also informed HB 452, the Artificial Intelligence Applications Relating to Mental Health Act, which was signed by the Governor in March of this year.25 This legislation focuses on mental health AI to minimize compliance burdens. It defines “mental health chatbots,” regulates the use of personal information, mandates clear labeling, sets advertising guidelines, and outlines conditions for an affirmative defense.

The law recognizes that AI mental health services offer affordable, accessible alternatives to human therapists. To qualify for the affirmative defense, providers must involve licensed therapists in chatbot development and monitoring, ensuring alignment with clinical best practices. The standard for risk assessment requires chatbots to pose no greater risk than licensed therapists, distinguishing HB 452 from some laws that require AI to be superior to human standards. The legislation effectively addresses what the Utah legislature saw as statutory gaps, safeguards consumers, and supports innovation without excessive restrictions.

Utah’s approach to AI regulation is a terrific example for legislators at the state and federal level. The Utah AI Act also inspired the American Legislative Exchange Council (ALEC) Model State Artificial Intelligence Act.26

Other work to improve the AI regulatory environment

Utah is a leader but is not alone in pro-innovation AI approaches. Other examples include Montana’s just-passed SB 212, which would create a “Right to Compute,”27 and New Hampshire’s similar proposal to amend the state’s constitution.28 Texas has also introduced HB 3808, based on the ALEC model legislation.29

There are also ways in which Congress could improve the regulatory environment to spur AI-driven economic growth. We discussed key recommendations in our recent response to the Office of Science and Technology Policy’s request for comment on the development of an Artificial Intelligence Action Plan, including:30

- Prevent Conflicting State Regulations: Enact federal laws—including negative liability, safe harbor, and right-to-compute measures—to preempt restrictive or overly burdensome state-level AI regulations.

- Reform Liability Frameworks to Promote AI Innovation: Clarify legal liability distinctions between developers of general-purpose AI models and those deploying them, protecting foundational AI innovation from excessive litigation risks.

- Enhance AI Innovation Through Regulatory Sandboxes: Establish a federal regulatory sandbox enabling supervised yet flexible testing of AI innovations in federally regulated industries.

- Unlock Federal Data for AI Advancement: Require federal agencies to inventory, digitize, and openly share unstructured datasets, significantly enhancing resources available for AI training and development.

In short, the U.S.’s existing software regulatory environment currently fosters innovation and supports American AI dominance. But there is critical work to do, both at the state and federal level, to maintain and improve that regulatory environment.

Reforming the U.S. Energy System for an AI Moonshot

While the current U.S. software regulatory environment permits rapid innovation, this is not the case for the energy infrastructure regulatory environment. Our inability to meet AI’s growing energy needs threatens to be a major bottleneck to American AI dominance.

We are, for regulatory reasons, far too slow to 1) build and 2) connect new energy.

Permitting slows and stops new energy sources. The U.S. struggles to build new energy generation because permitting reviews are out of control. According to the Council on Environmental Quality’s January report, two out of three federal environmental impact statements take longer than the two-year timeline required by statute, and final environmental impact statements issued in 2024 took a median of 2.2 years and an average of 3.8 years.31 In addition to consuming years of time, these reviews needlessly run to thousands of pages.32 This avalanche of paperwork wrapped in red tape has discouraged new energy projects of all kinds and slowed those that have been pursued.

The National Environmental Policy Act (NEPA) creates perverse incentives that cause these delays. NEPA is a procedural statute. If a project exhaustively identifies every way it may affect the environment, then the proposal passes through NEPA. Combined with the ability of private parties to sue, this incentivizes builders to generate litigation-proof reviews that serve little practical purpose in protecting the environment.

The most promising, albeit aggressive, option is simply to repeal the National Environmental Policy Act and replace it with substantive protections. Short of this, there are other promising options. Congress could defang activist NIMBY litigation by shortening the statute of limitations on NEPA-related actions and adding limits on injunctions. Congress could also clarify that agencies need only examine the direct, proximate, and reasonably foreseeable effects of federal actions.

Regulation hinders interconnecting new energy with the electrical grid. When we manage to build new sources of energy, it takes grid operators too long to connect those sources into the grid – again, because of regulation. Consider that after Constellation Energy announced plans to restart a reactor at Three Mile Island in Pennsylvania to supply power to Microsoft, Governor Josh Shapiro had to request special treatment to get the plant reconnected.33

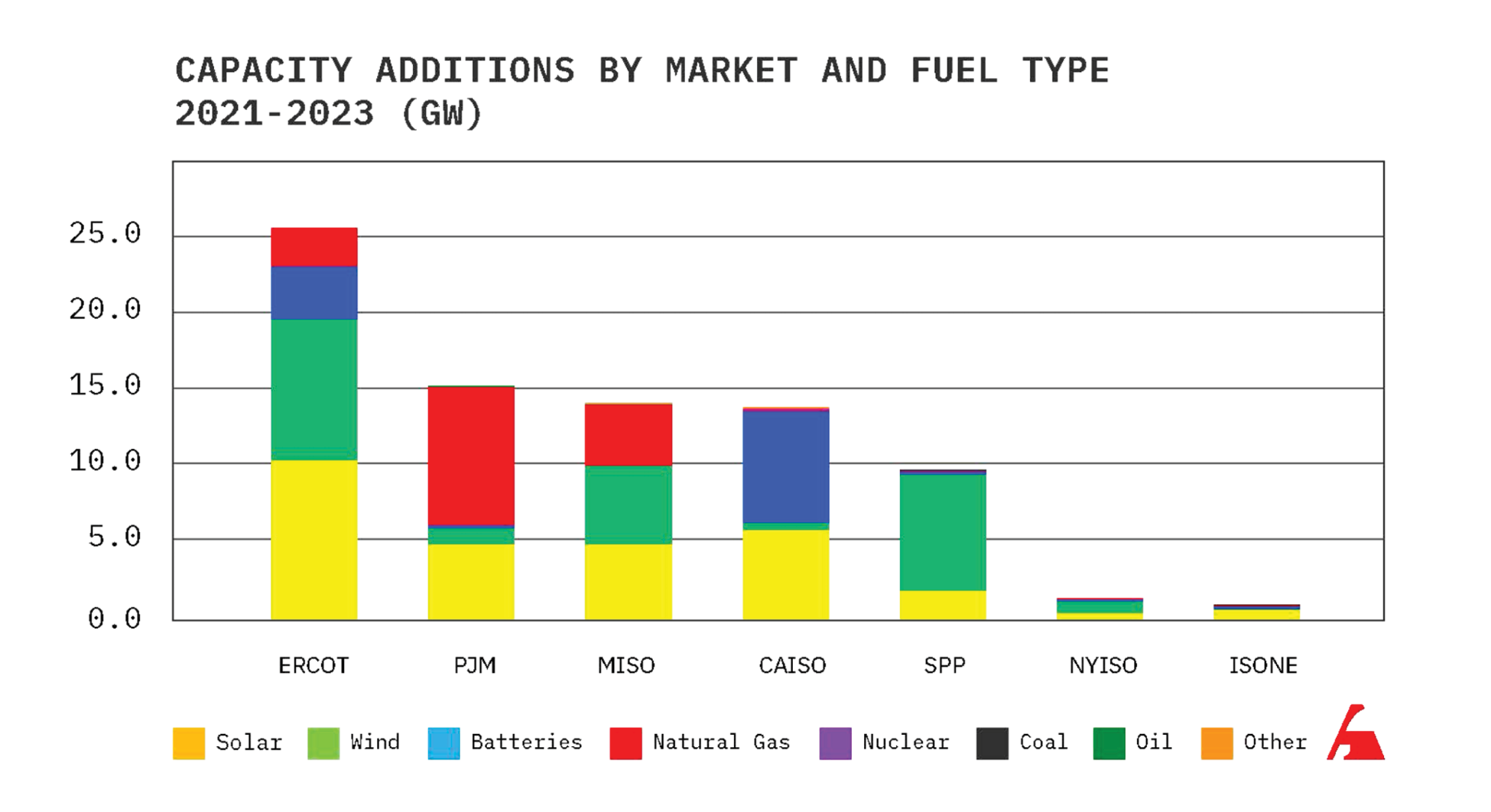

We know that we can connect generators into the grid much faster because the only major grid operator outside the Federal Energy Regulatory Commission’s jurisdiction, the one in Texas, does exactly that. From 2021 to 2023, the Electric Reliability Council of Texas (ERCOT) added 25 gigawatts, compared to 15 gigawatts for the next highest, PJM in the eastern U.S. That is, Texas added about 70% more than the runner-up, and more still relative to every other grid.34

Texas can connect new energy faster for two interrelated reasons. First, Texas has no capacity market. Capacity markets require extensive research, complicated modeling, and often expensive upgrades before new generation capacity can be added to the grid.35 Instead, Texas operates an energy-only system and relies on a “connect and manage” approach to grid operation. Generators connect more quickly but know that they can be curtailed by the grid operators to maintain the safe operation of the system.36

The second reason is that Texas’s connect and manage approach actually works, unlike the comparable options offered by other grid operators. Other grid operators nominally have a “shortcut” pathway onto the grid that, in form, looks like Texas’s approach. But research demonstrates that the shortcut takes as much time as the long way around.37 Because of this, outside of Texas, generators largely ignore the shortcut option.

A growing body of academic research suggests that the existing operations of capacity markets and interconnection need reform to enable faster building and market-driven responses to rising electricity costs or rising capacity costs.38 Texas shows what is possible, and the federal government should seek to emulate its success in connecting new generation in the rest of the country.

In sum, to foster an AI moonshot, Congress should clear the way both to build and connect new energy infrastructure. A legislative energy infrastructure agenda should:

- Repeal the National Environmental Policy Act (NEPA) entirely and replace it with substantive protections.

- Shorten the statute of limitations for judicial review of NEPA decisions to 180 days (at most) instead of the six years found in statute.39

- Require that permitting agencies consider only the direct, proximate, and reasonably foreseeable effects of federal actions.

- Exempt more routine actions from review and expand categorical exclusions.

- Allow injunctive relief only in rare cases where (1) claims are brought promptly, (2) the parties bringing them have direct and preexisting connections to the action’s effects, and (3) there is a risk of imminent and substantial environmental harm and no other equitable remedy is available as a matter of law.

- Direct agencies to develop procedures and reforms that allow swift leasing and use of federal lands for all types of energy and mineral development and production.

- Direct the Federal Energy Regulatory Commission to pursue swift and decisive action to speed up interconnection timelines.

These reforms will be challenging to achieve, but the resulting economic benefits from abundant energy production, including for the use of AI, are well worth the effort.

Conclusion

America’s AI moonshot depends on creating and maintaining a regulatory environment that supports both rapid software innovation and robust energy infrastructure development. The permissionless approach that has fostered U.S. leadership in software innovation must be safeguarded against fragmented or overly burdensome state regulation. Utah’s approach offers a valuable model, emphasizing accountability without stifling creativity or entrepreneurial initiative. And there are other important steps Congress should take to strengthen the software regulatory environment.

At the same time, our outdated regulatory approach to energy infrastructure threatens to constrain America’s AI potential. Streamlining permitting processes, reforming or repealing NEPA, and adopting flexible grid-interconnection strategies akin to Texas’s successful model will be crucial steps toward ensuring that our energy infrastructure can meet the rising demands of AI technology.

The path forward is clear: by addressing these dual regulatory challenges proactively and decisively, Congress can ensure that the United States not only maintains but enhances its leadership in artificial intelligence. Such an approach will unlock AI’s full potential—driving economic prosperity, enhancing public well-being, and cementing America’s competitive advantage for generations to come.

Thank you again for the opportunity to testify today, and I look forward to your questions.

1 See, e.g., McKinsey & Company, The Economic Potential of Generative AI: The Next Productivity Frontier (June 2023), https://www.mckinsey.com/capabilities/mckinsey-digital/our-insights/the-economic-potential-of-generative-AI-the-next-productivity-frontier#key-insights (estimating “that generative AI could add the equivalent of $2.6 trillion to $4.4 trillion annually across the 63 use cases we analyzed.”); Goldman Sachs, The Potentially Large Effects of Artificial Intelligence on Economic Growth (March 2023), https://www.gspublishing.com/content/research/en/reports/2023/03/27/d64e052b-0f6e-45d7-967b-d7be35fabd16.html (estimating that “generative AI could raise annual US labor productivity growth by just under 1½pp over a 10-year period following widespread adoption…”).

2 Lapo Fioretti, Carla La Croce, Andrea Siviero, Elisabeth Clemmons, The Global Impact of Artificial Intelligence on the Economy and Jobs: AI Will Steer 3.5% of GDP in 2030. (August 2024), IDC, https://www.idc.com/getdoc.jsp?containerId=prUS52600524.

3 Vanessa Parli, Raymond Perrault, Erik Brynjolfsson, et al, The AI Index 2024 Annual Report at 272 (2024), https://hai.stanford.edu/ai-index/2024-ai-index-report.

4 Id.

5 Id. at 273.

6 Fabrizio Dell’Acqua, Charles Ayoubi, Hila Lifshitz-Assaf, et al, The Cybernetic Teammate: A Field Experiment on Generative AI Reshaping Teamwork and Expertise (March 21, 2025), https://papers.ssrn.com/sol3/papers.cfm?abstract_id=5188231.

7 Vanessa Parli, Raymond Perrault, Erik Brynjolfsson, et al, The AI Index 2024 Annual Report at 275 (2024), https://hai.stanford.edu/ai-index/2024-ai-index-report (citing studies showing that “AI access appears to narrow the performance gap between low- and high-skilled workers.”).

8 Ivan Mehta, A quarter of startups in YC’s current cohort have codebases that are almost entirely AI-generated (March 6, 2025), TechCrunch, https://techcrunch.com/2025/03/06/a-quarter-of-startups-in-ycs-current-cohort-have-codebases-that-are-almost-entirely-ai-generated/ (“A year ago, [founders] would’ve built their product from scratch – but now 95% of it is built by an AI.”).

9 Jan Zilinsky and Thomas Zeitzoff, Working Paper: Artificial Intelligence, Social Media, and the Politics of Anti-technology at 38 fig.11 (September 2024), https://abundance.institute/articles/politics-of-anti-technology.

10 Kate Morgan, Doctors Told Him He Was Going to Die. Then A.I. Saved His Life (March 20, 2025), The New York Times, https://www.nytimes.com/2025/03/20/well/ai-drug-repurposing.html?smid=tw-share.

11 Id.

12 AI diagnoses major cancer with near perfect accuracy (March 14, 2025), Charles Darwin University Australia, https://www.cdu.edu.au/news/ai-diagnoses-major-cancer-near-perfect-accuracy.

13 Ingrid Wickelgren, Brain-to-speech tech good enough for everyday use debuts in a man with ALS (August 14, 2024), Scientific American, https://www.scientificamerican.com/article/brain-to-speech-tech-good-enough-for-everyday-use-debuts-in-a-man-with-als/.

14 Nikhil Sahni, George Stein, Rodney Zemmel, and David M. Cutler, The potential impact of artificial intelligence on healthcare spending (October 2023), National Bureau of Economic Research, https://www.nber.org/papers/w30857.

15 Michael Chui et al, The Economic Potential of Generative AI at 30 (June 2023), McKinsey and Co., https://www.mckinsey.com/capabilities/mckinsey-digital/our-insights/the-economic-potential-of-generative-ai-the-next-productivity-frontier#introduction.

16 Id.

17 Bertoncello, M. and D. Wee, Ten ways autonomous driving could redefine the automotive world (2015), McKinsey & Company, http://www.mckinsey.com/industries/automotive-and-assembly/our-insights/ten-ways-autonomous-driving-could-redefine-the-automotive-world.

18 AI Case Study: UPS saves over 10 million gallons of fuel and up to $400m in costs annually with advanced telematics and analysis, https://www.bestpractice.ai/ai-case-study-best-practice/ups_saves_over_10_million_gallons_of_fuel_and_up_to_%24400m_in_costs_annually_with_advanced_telematics_and_analysis.

19 Donald J. Trump, Removing Barriers to American Leadership in Artificial Intelligence (January 31, 2025), Exec. Order No. 14179, 90 Fed. Reg. 8741, https://www.federalregister.gov/documents/2025/01/31/2025-02172/removing-barriers-to-american-leadership-in-artificial-intelligence.

20 Remarks by Vice President Vance at the Artificial Intelligence Action Summit (February 11, 2025), https://www.whitehouse.gov/remarks/2025/02/remarks-by-vice-president-vance-at-the-artificial-intelligence-action-summit/.

21 Id.

22 Id.

23 MultiState.ai, Artificial Intelligence (AI) Legislation, https://www.multistate.ai/artificial-intelligence-ai-legislation.

24 Utah S.B. 149, Artificial Intelligence Amendments (signed on March 13, 2024), 2024 Utah General Assembly, https://le.utah.gov/~2024/bills/static/SB0149.html.

25 Utah H.B. 452, Artificial Intelligence Amendments (signed on March 25, 2025), 2025 Utah General Assembly, https://le.utah.gov/~2025/bills/static/HB0452.html.

26 American Legislative Exchange Council, Model State Artificial Intelligence Act, https://alec.org/model-policy/model-state-artificial-intelligence-act/

27 Montana SB 212, Creating the Right to Compute Act and requiring shutdowns of AI controlled critical infrastructure (sent to enrolling March 28, 2025), https://bills.legmt.gov/#/laws/bill/2/LC0292.

28 New Hampshire CACR6, relating to the right to compute. Providing that the right of individuals to use computation resources shall not be infringed (considered in executive session March 5, 2025), https://gc.nh.gov/house/legislation/billinfo.aspx?id=828.

29 Texas HB 3808, Relating to the creation of the artificial intelligence advisory council and the establishment of the artificial intelligence learning laboratory (introduced March 5, 2025), https://capitol.texas.gov/BillLookup/History.aspx?LegSess=89R&Bill=HB3808.

30 These recommendations are based on our response to the Office of Science and Technology Policy’s Request for Comment on the Development of an Artificial Intelligence Action Plan. That document offers more detail about some of these recommendations. See Neil Chilson and Josh Smith, Comment on Request for Information on the Development of an Artificial Intelligence Action Plan (March 2025), https://abundance.institute/articles/development-of-an-AI-action-plan.

31 Environmental Impact Statement Timelines (2010-2024) (January 13, 2025), Council on Environmental Quality, https://ceq.doe.gov/docs/nepa-practice/CEQ_EIS_Timeline_Report_2025-1-13.pdf.

32 Jennifer Morales and Steffen Rigby, NEPA Timelines for Clean Energy Projects: Understanding Delays in Clean Energy Development (March 12, 2025), The Center for Growth and Opportunity, https://www.thecgo.org/research/nepa-timelines-for-clean-energy-projects-understanding-delays-in-clean-energy-development/; Aidan Mackenzie, How NEPA Will Tax Clean Energy (July 25, 2024), Institute for Progress, https://ifp.org/how-nepa-will-tax-clean-energy/.

33 Josh T. Smith, Why Don’t We Build New Nuclear Plants Anymore? (September 26, 2024), Wall Street Journal, https://www.wsj.com/opinion/why-dont-we-build-new-nuclear-plants-anymore-84f8375f?reflink=desktopwebshare_permalink.

34 Tyler H. Norris, Comments of FERC Workshop on Innovations and Efficiencies in Generator Interconnection (September 2024), Federal Energy Regulatory Commission Pre-workshop Comments AD24-9-000, https://nicholasinstitute.duke.edu/publications/comments-ferc-workshop-innovations-efficiencies-generator-interconnection; Tyler H. Norris, Beyond FERC Order 2023: Considerations on Deep Interconnection Reform (August 2023), Duke University Nicholas Institute for Energy, Environment, and Sustainability, https://nicholasinstitute.duke.edu/sites/default/files/publications/beyond-ferc-order-2023-considerations-deep-interconnection-reform.pdf.

35 Todd Aagaard and Andrew N. Kleit, Electricity Capacity Markets (2022), Cambridge University Press, ISBN: 9781108779159.

36 Jacob Mays, Generator Interconnection, Network Expansion, and Energy Transition (December 2023), IEEE Transactions on Energy Markets, Policy and Regulation 1, no. 4: 410–19, ISSN: 2771-9626; Josh T. Smith, Is All This Red Tape Really to Protect Incumbents? (May 3, 2024), Powering Spaceship Earth, https://poweringspaceshipearth.substack.com/p/is-all-this-red-tape-really-to-protect.

37 Tyler H. Norris, Beyond FERC Order 2023: Considerations on Deep Interconnection Reform (August 2023), Duke University Nicholas Institute for Energy, Environment, and Sustainability, https://nicholasinstitute.duke.edu/sites/default/files/publications/beyond-ferc-order-2023-considerations-deep-interconnection-reform.pdf; Joseph Rand et al., Characteristics of Power Plants Seeking Transmission Interconnection As of the End of 2023 (April 2024), Lawrence Berkeley National Laboratory, https://emp.lbl.gov/queues.

38 Todd Aagaard and Andrew N. Kleit, Electricity Capacity Markets (2022), Cambridge University Press, ISBN: 9781108779159; Todd S. Aagaard and Andrew N. Kleit, The Complexity Dilemma in Policy Market Design (2019), Duke Environmental Law & Policy Forum, https://scholarship.law.duke.edu/delpf/vol30/iss1/1; Todd Aagaard and Andrew Kleit, Why Capacity Market Prices Are Too High (April 1, 2022), Utilities Policy 75, https://doi.org/10.1016/j.jup.2022.101335; Molly Robertson, Karen Palmer, and Todd Aagaard, Reforming Resource Adequacy Practices and Ensuring Reliability in the Clean Energy Transition (May 2023), Resources for the Future, https://www.rff.org/publications/reports/reforming-resource-adequacy-practices-and-ensuring-reliability-in-the-clean-energy-transition/.

39 Mark C. Rutzick, A Long and Winding Road: How the National Environmental Policy Act Has Become the Most Expensive and Least Effective Environmental Law in the History of the United States, and How to Fix It (October 16, 2018), The Federalist Society, https://rtp.fedsoc.org/paper/national-environmental-policy-act/.